If you work in the transcription industry, few capabilities are as transformative as intent recognition. It goes beyond converting speech into text: the goal is to understand the motivations and needs that people express when they speak. This guide explains how to perform intent recognition on transcripts effectively, drawing on both personal experience and current industry data.

What Is Intent Recognition from Transcription?

Intent recognition from transcription means analyzing transcribed text to identify what the speaker is trying to achieve. It relies on natural language processing (NLP) techniques to extract information that can inform strategic decisions or improve user experiences. For example, when a customer calls a support line, knowing whether they want help, are making a complaint, or are requesting information is essential to giving an appropriate response.

In my experience, intent recognition goes well beyond simple keyword identification; it demands a nuanced understanding of context and the subtleties of language. That complexity is what makes the field both compelling and essential for developers who want to build intelligent systems.

How to Recognize Intent in Transcriptions with transcribetube.com

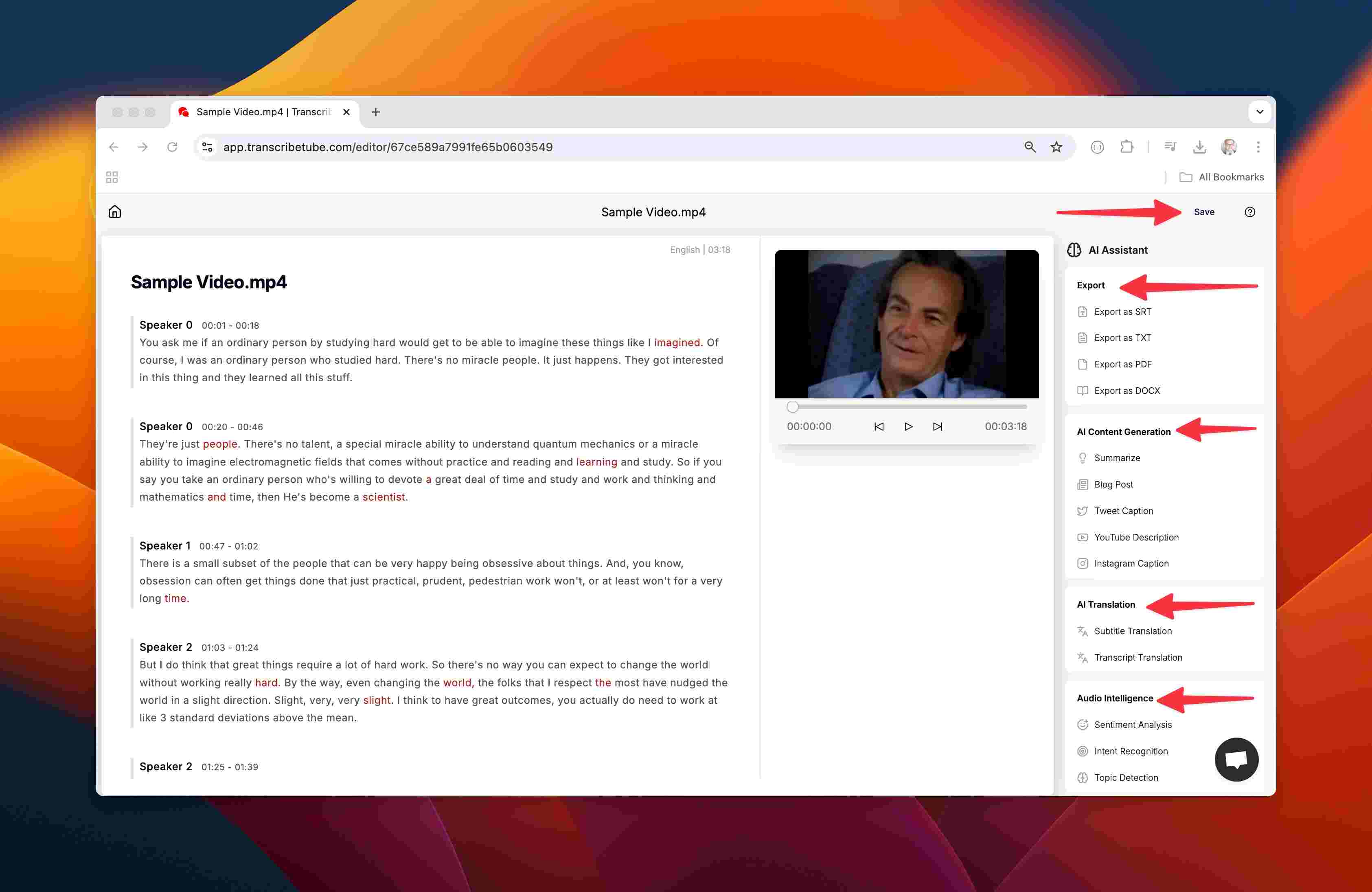

You can easily transcribe a recording and get intent insights for each sentence in the TranscribeTube editor.

Step-by-Step Guide to Create Intent Recognition for your Transcript

Here's the straightforward, seven-step guide to creating "Intent Recognition" with TranscribeTube:

Sign up on Transcribetube.com

Start by signing up on TranscribeTube. As a welcome gift, new users receive free transcription time, an excellent opportunity to explore the service.

On the home page of TranscribeTube, locate the 'Sign Up' button and follow the on-screen instructions to create your account.

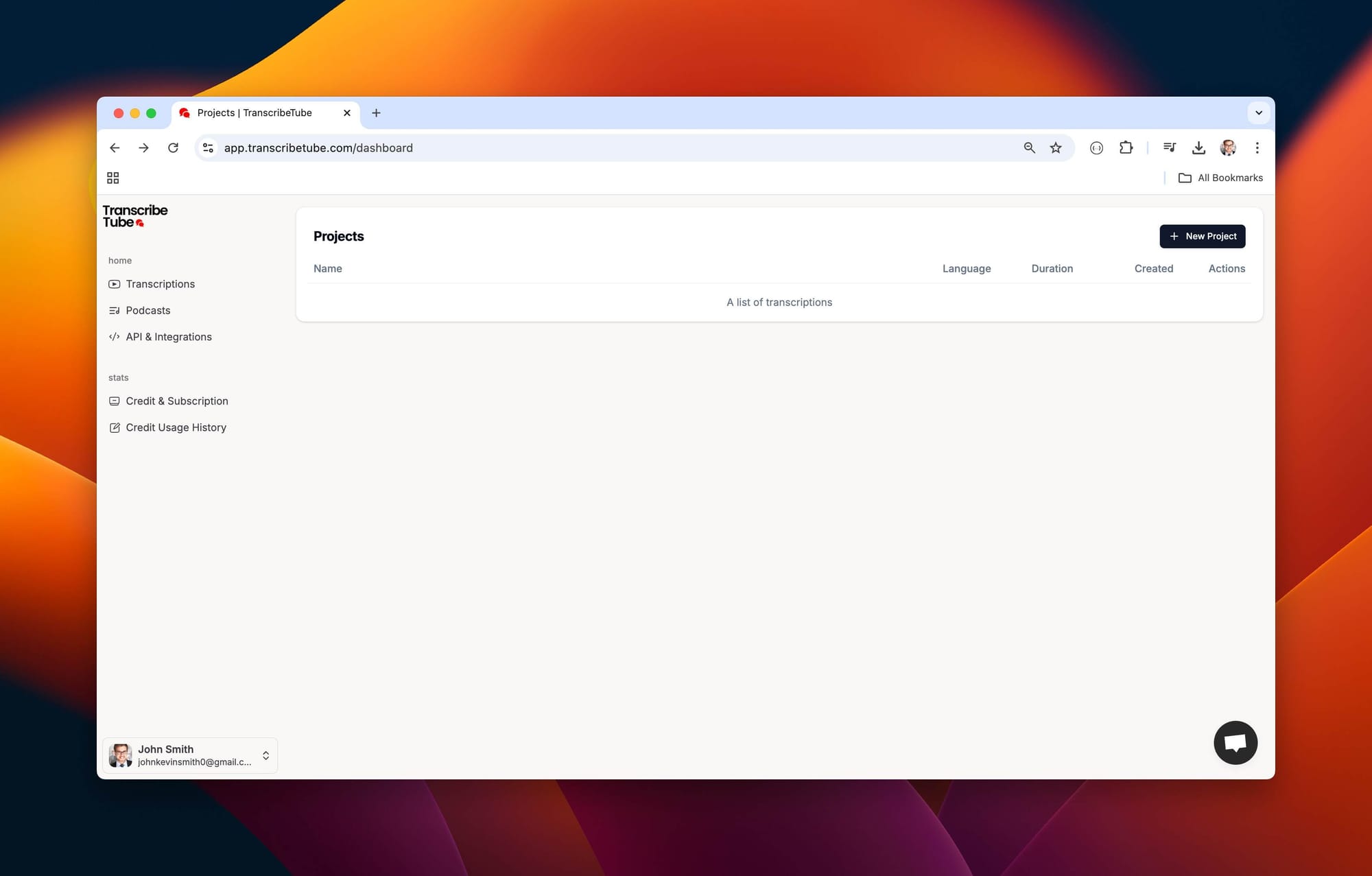

1) Navigate to dashboard.

Once you're logged in, open your dashboard.

How to: Navigate to your dashboard, where you can see a list of the transcriptions you have made before.

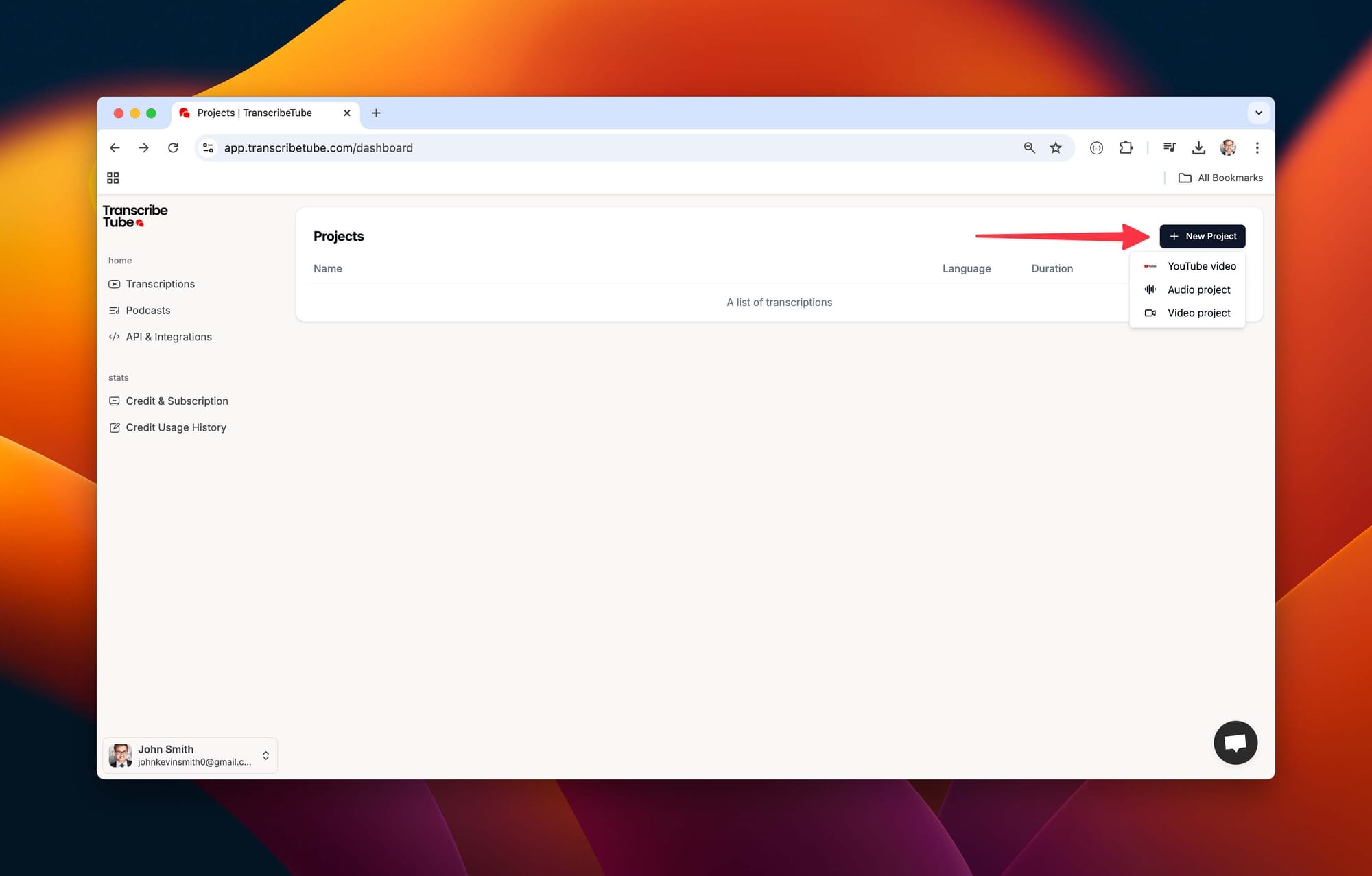

2) Create a New Transcription

Once you're logged in, it's time to transcribe your first video.

How to: From your dashboard, click 'New Project' and select the type of recording file you want to transcribe.

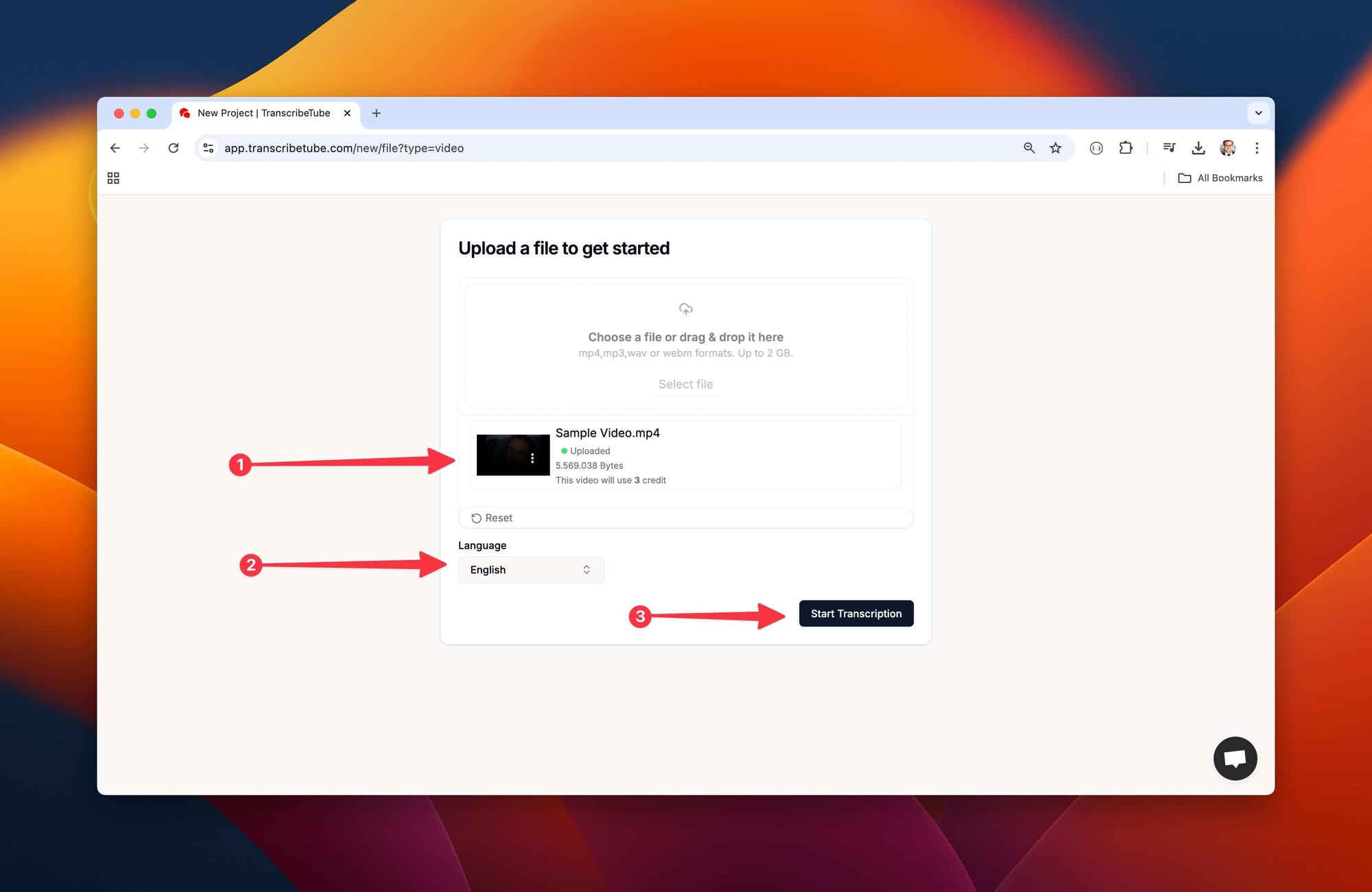

3) Upload a file to get started

After you select the type of file you want to transcribe, upload it to the tool to start the transcription process.

How to: Drag in or select the file you want to transcribe, then choose the language of the transcript.

4) Edit Your Transcription with

Transcriptions might need a tweak here and there. Our platform allows you to edit your transcription while listening to the recording, ensuring accuracy and context.

You can also export the transcript in several file formats and use a number of AI-powered options.

When you are done, save your transcript using the button in the upper right corner.

5) Start Intent Recognition

How to: Click "Intent Recognition" in the bottom right corner.

6) Create Intelligence

How to: If your file does not yet have audio intelligence, our AI tools will help you create it.

7) Final Output

How to: Your Sentiment Analysis, Intent Recognition, and Topic Detection results are now ready to use.

Why Identifying Intent Matters for Businesses and User Experiences

Identifying intent is a cornerstone for businesses that want to improve customer interactions. Companies that build intent recognition into their NLP pipelines commonly report gains in efficiency and customer satisfaction, which translate into better business performance and more personalized user experiences.

For developers, discerning intent enables more responsive systems, whether that is a chatbot that engages users effectively or a voice assistant that anticipates what users need. Accurate intent recognition marks the difference between a frustrating interaction and a seamless one.

Common Applications (Call Centers, Chatbots, Voice Assistants, Market Research)

Intent recognition is used across many domains:

Call Centers: Analyzing transcripts of customer interactions helps businesses identify recurring issues and improve service delivery. For instance, one recent study reported that intent recognition models improved accuracy in pinpointing customer needs by over 30%.

Chatbots: Effective chatbots use intent recognition to provide relevant responses to user queries. This both improves user satisfaction and trims operational costs for businesses.

Voice Assistants: Voice assistants like Amazon Alexa or Google Assistant lean heavily on intent recognition to interpret voice commands precisely. The more accurately these systems grasp user intentions, the more useful they are.

Market Research: Businesses parse transcribed interviews or focus group discussions to glean insights about consumer behavior and preferences. This data-driven approach makes tailoring products and marketing strategies much more effective.

The following sections cover the essentials of intent recognition, explore the range of approaches and tools available, and share best practices rooted in extensive experience in the field. Whether you're a developer keen to implement these techniques or simply interested in how they work, this guide will give you the knowledge you need to handle the intricacies of intent recognition from transcription.

Understanding the Foundations of Intent Recognition

Going deeper into intent recognition requires a firm grasp of the concepts that underlie the technology. My experience in the transcription industry has shown that understanding these foundations not only helps in implementing effective solutions but also sharpens how we approach the challenges of recognizing intent from spoken language.

How Speech-to-Text Transcription Lays the Groundwork

At its heart, intent recognition depends on accurate speech-to-text transcription. This process converts spoken language into written text and is the crucial first step for any NLP activity: transcription quality directly determines how well intent recognition works. An error-riddled transcript can lead to a misreading of user intent.

For example, a project that involved implementing a transcription service for a call center comes to mind. In the early stages, accuracy suffered because of background noise and overlapping speech. However, refining our transcription methods, by using more advanced algorithms and training models on domain-specific jargon, improved the precision of our transcripts. This improvement allowed our intent recognition models to operate more reliably.
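
One common way to put a number on transcript quality is word error rate (WER): the word-level edit distance between a trusted reference transcript and the automatic one, divided by the number of reference words. The sketch below is a minimal illustration rather than a production metric. The function name and the sample sentences are made up for this example, and real evaluations usually normalize punctuation and casing more carefully.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] is the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: a single misheard word out of six.
reference = "i want to cancel my order"
hypothesis = "i want to counsel my order"
print(round(word_error_rate(reference, hypothesis), 3))
```

Here one wrong word in six gives a WER of about 0.167, yet that word ("cancel" heard as "counsel") is exactly the one that carries the intent. A low overall error rate therefore does not guarantee reliable intent recognition.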

Key NLP Concepts (Entities, Keywords, Sentiment)

Effective intent recognition requires developers to be familiar with several key concepts in natural language processing:

Entities: These are well-defined pieces of data extracted from the text, such as names, dates, locations, or product types. For instance, in the phrase "Book a flight to New York on September 15," "New York" and "September 15" are entities that provide context for the user's intent.

Keywords: These are significant words or phrases that indicate what the user aims to achieve. Identifying keywords helps classify user inputs into a predefined set of intents.

Sentiment: Reading the emotional tone of a user's input can also feed into intent recognition. It not only helps in understanding the user's motive but also makes it possible to respond in an emotionally appropriate way.

Core Challenges (Accents, Noise, Domain-Specific Jargon)

Despite technological advances, several obstacles persist in intent recognition from transcription:

Accents and Dialects: Differences in pronunciation can cause misinterpretations during transcription. For instance, in one project involving customer interactions across diverse regions, the broad spectrum of accents posed significant challenges for our models until we incorporated training data that reflected this diversity.

Background Noise: Noisy environments can affect speech clarity. In call centers where multiple conversations unfold simultaneously, clean audio input is critical for accurate transcription and subsequent intent recognition.

Domain-Specific Jargon: Different sectors have their own terminology that may not be adequately represented in general NLP models. For example, conversations in medical or technical fields frequently include specialized vocabulary that demands tailored models.

The many challenges I've navigated have taught me that addressing these complications means continually iterating on both transcription methods and intent recognition algorithms. Throughout this guide, I will share practical strategies and tools that can help overcome these obstacles.

Preparing High-Quality Transcripts

With the foundational concepts of intent recognition established, the next pivotal step is preparing high-quality transcripts. Quality transcription is not merely a technical requirement; it is the cornerstone on which effective intent recognition systems are built. In my experience, transcript quality significantly influences the performance of NLP models, which makes this step vital for developers aiming to implement successful intent recognition solutions.

Selecting Reliable Transcription Services (Manual vs. Automated)

When considering transcription, developers have two primary options: manual transcription services and automated transcription tools. Each has its own advantages and limitations.

- Manual Transcription: Human transcribers listen to audio recordings and produce written transcripts. Although this method tends to deliver higher accuracy, especially with challenging audio or complex dialogue, it can be time-consuming and costly. I've frequently relied on manual transcription for projects requiring high precision, such as legal or medical documentation, where every word counts.

- Automated Transcription: Automated tools use speech recognition technology to convert audio to text quickly. They are increasingly sophisticated and suit many applications. Nevertheless, they may struggle with accents, background noise, or domain-specific jargon. I've found that combining automated transcription with human review provides a balance between speed and accuracy.

When choosing a transcription service, it's crucial to weigh the specific requirements of your project. For example, if you're working on customer support calls where speed is paramount, an automated solution might be ideal. Conversely, for nuanced focus group discussions that require deeper understanding, manual transcription may be justified.

Ensuring Accuracy: Punctuation, Formatting, and Speaker Identification

Once you have a transcript, ensuring its accuracy becomes paramount. Here are some key aspects to focus on:

- Punctuation: Appropriate punctuation conveys meaning and context in transcribed text. In my experience, neglecting punctuation can lead to misinterpretations of intent. For instance, the difference between "Let's eat, Grandma!" and "Let's eat Grandma!" is substantial. Accurate punctuation improves both readability and the performance of intent recognition algorithms.

- Formatting: Consistent formatting fosters clarity. This includes using paragraph breaks for different speakers and presenting information such as timestamps in a uniform way. I've found that well-formatted transcripts not only improve readability but also make data processing for NLP tasks easier.

- Speaker Identification: Clearly distinguishing who is speaking at any given point is crucial in multi-party conversations. For instance, in call center environments, knowing whether the speaker is a customer or an agent can substantially influence intent recognition outcomes. Adding speaker tags to transcripts allows a more precise analysis of interactions.
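
To show why consistent formatting and speaker tags pay off downstream, here is a small sketch that parses a speaker-tagged transcript and keeps only the customer's turns for intent analysis. The line format `[MM:SS] Speaker: text`, the class name, and the sample dialogue are assumptions made for this example; adjust the pattern to whatever your transcription tool actually exports.

```python
import re
from dataclasses import dataclass
from typing import List

# Assumed line format: "[MM:SS] Speaker: utterance"
LINE_PATTERN = re.compile(r"^\[(\d{2}):(\d{2})\]\s*(\w+):\s*(.+)$")


@dataclass
class Utterance:
    start_seconds: int
    speaker: str
    text: str


def parse_transcript(raw: str) -> List[Utterance]:
    utterances = []
    for line in raw.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match is None:
            continue  # skip blank or malformed lines
        minutes, seconds, speaker, text = match.groups()
        start = int(minutes) * 60 + int(seconds)
        utterances.append(Utterance(start, speaker.lower(), text))
    return utterances


# Hypothetical call excerpt
raw_transcript = """
[00:03] Agent: Thank you for calling, how can I help?
[00:07] Customer: I'd like to return a product I bought last week.
[00:12] Agent: Sure, I can help with that.
"""

utterances = parse_transcript(raw_transcript)
customer_turns = [u.text for u in utterances if u.speaker == "customer"]
print(customer_turns)
```

Filtering by speaker before classification keeps agent phrases such as "how can I help" from being mistaken for customer intents.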

Data Cleaning and Preprocessing for Better NLP Performance

Once you have a high-quality transcript, the next step is data cleaning and preprocessing, an often overlooked but critical part of preparing data for NLP applications.

- Removing Noise: This means stripping out filler words (like "um" or "uh"), repeated phrases, and irrelevant content that doesn't contribute to understanding intent. During a project involving customer feedback calls, removing such noise notably improved our model's ability to classify intents accurately.

- Normalizing Text: Standardizing language usage, such as converting all text to lowercase or correcting common misspellings, can enhance model performance. This step keeps your dataset consistent.

- Tokenization: Splitting the text into smaller units (tokens) helps models analyze language more effectively. Whether to use words or subwords as tokens depends on your application and the model you select.

- Stemming/Lemmatization: These techniques reduce words to their base forms (like converting "running" to "run"). This simplification can help the model generalize across different word variations.

Investing in these preprocessing steps can significantly improve the performance of intent recognition systems. In my experience, the cleaner and more orderly your data is, the more effectively your models learn from it.
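
The four steps above can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions: the filler list is a short hand-picked sample, and `crude_stem` is a deliberately naive suffix stripper standing in for a real stemmer or lemmatizer (it would turn "running" into "runn", which a proper tool such as those in NLTK or spaCy would avoid).

```python
import re

FILLERS = {"um", "uh", "erm", "hmm"}  # illustrative, not exhaustive
SUFFIXES = ("ing", "ed", "s")         # crude stand-in for a real stemmer


def tokenize(text):
    # Normalization (lowercasing) and word-level tokenization in one step
    return re.findall(r"[a-z0-9']+", text.lower())


def remove_noise(tokens):
    cleaned = []
    for token in tokens:
        if token in FILLERS:
            continue  # drop filler words
        if cleaned and cleaned[-1] == token:
            continue  # collapse immediate repetitions such as "I I"
        cleaned.append(token)
    return cleaned


def crude_stem(token):
    for suffix in SUFFIXES:
        # Only strip when at least three characters would remain
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text):
    return [crude_stem(token) for token in remove_noise(tokenize(text))]


print(preprocess("Um, I I was uh asking about returning two orders"))
# ['i', 'was', 'ask', 'about', 'return', 'two', 'order']
```

How much of this pipeline you need depends on the model: classic bag-of-words classifiers tend to benefit from all four steps, while transformer models ship with their own subword tokenizers and are usually given lightly cleaned text.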

Approaches to Intent Recognition

Several approaches can be used to classify user intentions from transcribed text. My experience in the transcription industry has shown that different methodologies yield different results depending on the context and complexity of the task. This section explores rule-based systems, machine learning models, and advanced deep learning techniques.

Rule-Based Systems and Keyword Spotting

Rule-based systems are one of the most straightforward forms of intent recognition. They depend on predefined rules and patterns to discern user intent from specific keywords or phrases. For instance, if a user states, "I want to book a flight," a rule-based system can treat the phrase "book a flight" as a signal of an intent to reserve a flight.

Early in my work with chatbots, keyword spotting served as a simple method for intent classification. While it is effective for simple queries, this approach has its limitations: it struggles with variations in language use and context. Users may express the same intent in many different ways, which makes it hard for inflexible rule-based systems to capture every possible phrasing.
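
A rule-based matcher of this kind can be sketched with a few regular expressions. The intent names and patterns below are hypothetical and chosen only to illustrate the idea and its main weakness.

```python
import re

# Hypothetical intents and patterns, for illustration only
INTENT_RULES = {
    "book_flight": [r"\bbook (a|my) flight\b", r"\bfly to\b"],
    "order_status": [r"\bwhere is my order\b", r"\btrack(ing)? (my )?order\b"],
    "product_return": [r"\breturn\b", r"\brefund\b"],
}

COMPILED_RULES = {
    intent: [re.compile(pattern, re.IGNORECASE) for pattern in patterns]
    for intent, patterns in INTENT_RULES.items()
}


def match_intent(utterance):
    """Return the first intent whose patterns match, or None."""
    for intent, patterns in COMPILED_RULES.items():
        if any(pattern.search(utterance) for pattern in patterns):
            return intent
    return None


print(match_intent("I want to book a flight"))               # book_flight
print(match_intent("Could you reserve me a plane ticket?"))  # None
```

The second call expresses the same intent as the first but matches no rule, which is the brittleness described above: every new phrasing needs a new pattern.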

Machine Learning Models (Logistic Regression, SVMs)

As I advanced in my career, I moved on to machine learning models for intent recognition, which are more adaptable than rule-based systems. Frequently used algorithms for intent classification include:

Logistic Regression: This fundamental algorithm estimates the probability that an input belongs to a particular class based on feature values derived from the text. It is binary in its basic form and extends to multiple intents through a multinomial (softmax) or one-vs-rest formulation.

Support Vector Machines (SVMs): SVMs work well in high-dimensional spaces, which makes them useful for text data. They classify inputs by finding the optimal hyperplane that separates different classes.

During a project involving customer service interactions, I employed an SVM model that notably improved our ability to classify intents accurately compared with our earlier keyword-based strategy. The model learned from labeled training data, which helped it generalize better across varied user inputs.
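
To make the idea of "estimating class probabilities from text features" concrete, here is a from-scratch sketch of multinomial logistic regression over bag-of-words counts, trained with plain stochastic gradient descent. It is a teaching example under stated assumptions: the six training utterances and intent names are invented, and the hyperparameters are arbitrary. In a real project you would more likely use a library implementation such as scikit-learn's `LogisticRegression` or `LinearSVC` with TF-IDF features.

```python
import math
from collections import Counter

# Tiny hand-written training set; intents and utterances are hypothetical.
TRAINING_DATA = [
    ("where is my order", "order_status"),
    ("track my order please", "order_status"),
    ("has my package shipped yet", "order_status"),
    ("i want to return this product", "product_return"),
    ("how do i send this item back", "product_return"),
    ("i would like a refund", "product_return"),
]


def featurize(text, vocab):
    """Bag-of-words counts over a fixed vocabulary; unknown words are ignored."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocab]


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def class_probabilities(features, weights, biases):
    scores = [bias + sum(w * x for w, x in zip(row, features))
              for row, bias in zip(weights, biases)]
    return softmax(scores)


def train(examples, epochs=200, learning_rate=0.5):
    vocab = sorted({word for text, _ in examples for word in text.lower().split()})
    labels = sorted({label for _, label in examples})
    weights = [[0.0] * len(vocab) for _ in labels]
    biases = [0.0] * len(labels)
    for _ in range(epochs):
        for text, label in examples:
            features = featurize(text, vocab)
            probs = class_probabilities(features, weights, biases)
            for k, name in enumerate(labels):
                # Gradient of the cross-entropy loss: predicted minus target
                error = probs[k] - (1.0 if name == label else 0.0)
                biases[k] -= learning_rate * error
                for j, x in enumerate(features):
                    weights[k][j] -= learning_rate * error * x
    return vocab, labels, weights, biases


def predict(text, model):
    vocab, labels, weights, biases = model
    probs = class_probabilities(featurize(text, vocab), weights, biases)
    return dict(zip(labels, probs))


model = train(TRAINING_DATA)
prediction = predict("track my order", model)
print(max(prediction, key=prediction.get), prediction)
```

Unlike the keyword rules, the learned weights let the model score utterances it has never seen verbatim, as long as they share vocabulary with the training examples.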

Deep Learning and Transformer-Based Models (BERT, GPT)

Deep learning has revolutionized natural language processing, and intent recognition in particular. Models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) perform exceptionally well because they capture complex contextual relationships within text. Other neural architectures are relevant too:

Recurrent Neural Networks (RNNs): RNNs process data sequentially and suit tasks where context builds up over time, such as dialogues in chatbots.

Convolutional Neural Networks (CNNs): Although primarily used in image processing, CNNs can be applied to text classification by capturing local patterns within short text inputs.

My work with transformer-based models resulted in a significant improvement in intent recognition accuracy. When we switched to BERT for classifying intents in customer inquiries, we saw a considerable increase in precision thanks to BERT's superior understanding of context compared with traditional models.

Hybrid Approaches: Combining Heuristics with ML

Hybrid approaches combine the strengths of rule-based systems and machine learning models. Pairing heuristic rules with machine learning techniques lets developers build more robust intent recognition systems that handle a broader range of user inputs.

For instance, one of my projects used keyword spotting as an initial filter to quickly sort user inputs into broad intent categories. We then applied a machine learning model to refine these classifications based on contextual understanding. This two-step process delivered higher accuracy while maintaining efficiency.
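
The two-step process can be sketched as a keyword filter followed by a pluggable second-stage model. Everything named here is hypothetical: the categories, keywords, and reference phrases are invented, and `overlap_model` is only a stand-in for a trained classifier so that the example runs on its own.

```python
import re
from typing import Callable, Dict, Optional

# Stage 1: keywords per broad category (hypothetical)
BROAD_KEYWORDS = {
    "orders": {"order", "package", "delivery"},
    "billing": {"invoice", "charge", "refund"},
}

# Stage 2: fine-grained intents per category, each with one reference phrase
FINE_INTENTS = {
    "orders": {
        "order_status": "where is my order",
        "order_cancel": "cancel my order",
    },
    "billing": {
        "refund_request": "i want a refund",
        "charge_dispute": "i do not recognize this charge",
    },
}


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z']+", text.lower()))


def broad_category(utterance: str) -> Optional[str]:
    words = tokens(utterance)
    for category, keywords in BROAD_KEYWORDS.items():
        if words & keywords:
            return category
    return None


def overlap_model(utterance: str, candidates: Dict[str, str]) -> str:
    """Stand-in for a trained classifier: picks the candidate whose
    reference phrase shares the most words with the utterance."""
    words = tokens(utterance)
    return max(candidates, key=lambda intent: len(words & tokens(candidates[intent])))


def classify(utterance: str,
             model: Callable[[str, Dict[str, str]], str] = overlap_model) -> str:
    category = broad_category(utterance)
    if category is None:
        return "unknown"  # nothing matched the cheap filter
    return model(utterance, FINE_INTENTS[category])


print(classify("Please cancel my order."))                    # order_cancel
print(classify("Why is there a charge I do not recognize?"))  # charge_dispute
print(classify("What's the weather like?"))                   # unknown
```

Because the second stage is passed in as a function, the placeholder can later be swapped for a real model without touching the keyword filter.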

Selecting NLP Tools and Frameworks

Choosing the right tools and frameworks is crucial for developers striving to build effective and efficient intent recognition systems. With so many options available, it can be daunting to determine which tools best fit your requirements. Drawing on my experience in the transcription industry, this section outlines some of the most popular NLP libraries, cloud platforms, and frameworks that can streamline intent recognition projects.

Popular Python Libraries (spaCy, NLTK, Hugging Face Transformers)

Python has emerged as the go-to language for natural language processing due to its rich ecosystem of libraries. Here are some essential libraries I have found particularly helpful:

spaCy: A powerful and efficient NLP library designed for production use. It offers pre-trained models for many languages and is optimized for speed. spaCy supports named entity recognition (NER), dependency parsing, and more, which makes it an excellent choice for intent recognition tasks.

Example Code:

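```python
import spacy

# Load the small English pipeline
# (install it first with: python -m spacy download en_core_web_sm)
nlp = spacy.load("en_core_web_sm")

doc = nlp("I want to book a flight to New York")

# Print each named entity together with its label
for ent in doc.ents:
    print(ent.text, ent.label_)
```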

NLTK (Natural Language Toolkit): Another popular library, NLTK provides tools for text processing and analysis. While it may not be as fast as spaCy, it offers a broad range of functionality, including tokenization, stemming, and part-of-speech tagging. I often turn to NLTK for educational purposes or smaller projects.

Hugging Face Transformers: This library has become immensely popular thanks to its support for state-of-the-art transformer models like BERT and GPT. It lets developers use pre-trained models for many NLP tasks, including intent recognition. Its ease of use and extensive documentation make it a favorite among developers.

Cloud Platforms and APIs (Google Cloud, AWS Comprehend, Azure Cognitive Services)

For those looking to implement intent recognition without the overhead of managing infrastructure, cloud platforms offer robust solutions:

Google Cloud Natural Language API: This service offers powerful tools for text analysis, including sentiment analysis and entity recognition. It can be integrated into applications to improve user interaction by helping them interpret intents.

AWS Comprehend: Amazon's NLP service offers features like entity recognition and sentiment analysis. It allows developers to build applications that automatically interpret user intents from their inputs.

Azure Cognitive Services: Microsoft's suite of services includes various APIs for natural language processing tasks. The Language Understanding (LUIS) service allows developers to build applications that interpret user intents accurately. Microsoft has announced the retirement of LUIS in favor of Conversational Language Understanding, so check the current Azure documentation before starting a new project.

In my experience, these platforms significantly cut development time while providing reliable performance. However, they charge based on usage, so it's important to evaluate your budget before committing.

Leveraging Pre-trained Language Models for Quick Start

Pre-trained language models have revolutionized the NLP field by enabling developers to achieve high accuracy with minimal effort. Models such as BERT and GPT can be fine-tuned on specific datasets to cater to unique intent recognition needs.

For instance, one of my projects involved fine-tuning a BERT model on customer service transcripts. The result was a system that classified user intents with much higher precision than our traditional machine learning techniques.

Example Code:

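```python
import torch
from transformers import BertForSequenceClassification, BertTokenizer

# Load the pre-trained tokenizer and model.
# num_labels is a placeholder: set it to the number of intents you defined.
# The classification head starts out randomly initialized, so predictions
# are not meaningful until the model is fine-tuned on labeled transcripts.
tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")
model = BertForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=3)
model.eval()

# Tokenize input data
inputs = tokenizer("I want to book a flight", return_tensors="pt")

# Forward pass without gradient tracking
with torch.no_grad():
    outputs = model(**inputs)

# Index of the highest-scoring intent
predicted_intent_id = outputs.logits.argmax(dim=-1).item()
```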

Step-by-Step Intent Recognition Process

Having explored various approaches to intent recognition, it's time to look at the concrete steps involved in implementing an effective intent recognition system. Drawing on my experience in the transcription industry, I have devised a structured process that brings clarity and precision to extracting user intent from transcribed data. Here, I present a step-by-step guide to help developers navigate this complex endeavor.

Defining Your Target Intents and Use Cases

The first step in the intent recognition process is to clearly define the intents you want to recognize. This means identifying the specific goals or actions users might express in their communications.

For instance, if you're building a customer support chatbot, potential target intents may include:

- Order Status Inquiry

- Product Return Request

- Technical Support Request

In my experience, creating an exhaustive list of potential intents based on previous user interactions has proven beneficial. This list can be informed by reviewing past transcripts or conducting user interviews to uncover common inquiries and concerns.

Annotating Training Data with Intent Labels

With your target intents defined, the next step is to annotate your training data with these intent labels. This process involves reviewing a set of transcribed interactions and assigning the appropriate intent label to each statement.

Consider the following customer inquiries:

- “Where is my order?” → Order Status Inquiry

- “I want to return this product.” → Product Return Request

My projects have taught me that a diverse set of annotated examples for each intent improves model accuracy. Aim for at least 10-20 varied utterances per intent so the model learns the many ways users might voice their requests.
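
A quick coverage check helps confirm that every target intent has enough annotated examples before training. The sketch below uses the sample inquiries from this section plus one invented row; the intent identifiers, the function name, and the choice of 10 as the minimum (the lower end of the range above) are assumptions for illustration.

```python
from collections import Counter

TARGET_INTENTS = {"order_status", "product_return", "technical_support"}
MIN_EXAMPLES_PER_INTENT = 10  # lower end of the 10-20 range suggested above

# (utterance, intent label) pairs; a real dataset would be far larger
annotated_rows = [
    ("Where is my order?", "order_status"),
    ("I want to return this product.", "product_return"),
    ("Has my package shipped?", "order_status"),
]


def missing_examples(rows, intents, minimum):
    """Return how many more examples each under-covered intent needs."""
    counts = Counter(label for _, label in rows)
    unknown = set(counts) - set(intents)
    if unknown:
        raise ValueError(f"labels outside the intent list: {sorted(unknown)}")
    return {
        intent: minimum - counts[intent]
        for intent in sorted(intents)
        if counts[intent] < minimum
    }


shortfall = missing_examples(annotated_rows, TARGET_INTENTS, MIN_EXAMPLES_PER_INTENT)
print(shortfall)
# {'order_status': 8, 'product_return': 9, 'technical_support': 10}
```

The check also rejects labels that are not in the agreed intent list, which catches typos introduced during annotation.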

Training, Validating, and Tuning Your Models

With your annotated dataset ready, it's time to train your intent recognition model. The training procedure depends on your chosen approach, whether that is a machine learning model like an SVM or a deep learning model like BERT.

Split Your Data: Partition your annotated dataset into training and validation sets (commonly an 80/20 split). This lets you evaluate the model's performance on unseen data.

Model Training: Use your training set to fit the model. If you're using TensorFlow with an LSTM architecture, for example, you would define the model architecture and compile it with an appropriate loss function and optimizer.

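```python
import tensorflow as tf

# Placeholder values: replace them with your own vocabulary size,
# embedding width, and intent list.
vocab_size = 10_000
embedding_dim = 64
intent_labels = ["order_status", "product_return", "technical_support"]

model = tf.keras.models.Sequential([
    tf.keras.layers.Embedding(input_dim=vocab_size, output_dim=embedding_dim),
    tf.keras.layers.LSTM(64),
    tf.keras.layers.Dense(len(intent_labels), activation="softmax"),
])

# categorical_crossentropy expects one-hot encoded intent labels
model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
```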

Validation: After training, evaluate the model using your validation set. Monitor metrics such as accuracy, precision, recall, and F1-score to gauge performance.

Tuning: Based on validation results, fine-tune hyperparameters (like learning rate or batch size) or adjust the model architecture if needed to enhance performance.
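
For the data split, a stratified split keeps each intent represented in both sets, which matters when some intents have few examples. The following is a minimal standard-library sketch; the function name, the fixed seed, and the synthetic rows are assumptions for illustration, and libraries such as scikit-learn offer an equivalent `train_test_split` with a `stratify` option.

```python
import random
from collections import defaultdict


def stratified_split(rows, validation_fraction=0.2, seed=42):
    """Split (text, label) rows so every label appears in the validation set."""
    by_label = defaultdict(list)
    for text, label in rows:
        by_label[label].append((text, label))

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, validation = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        # Hold out at least one example per intent
        n_validation = max(1, round(len(items) * validation_fraction))
        validation.extend(items[:n_validation])
        train.extend(items[n_validation:])
    return train, validation


# Synthetic rows: 10 examples of one intent, 5 of another
rows = [(f"order question {i}", "order_status") for i in range(10)]
rows += [(f"return question {i}", "product_return") for i in range(5)]

train_rows, validation_rows = stratified_split(rows)
print(len(train_rows), len(validation_rows))  # 12 3
```

With an 80/20 split, the 10 order examples contribute 2 validation rows and the 5 return examples contribute 1, so neither intent is missing from evaluation.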

Evaluating Performance (Precision, Recall, F1-Score)

Once you've trained and validated your model, evaluate its performance comprehensively:

Precision: The share of instances predicted as a given intent that actually belong to that intent. High precision means that when the model predicts an intent, it is likely to be correct.

Recall: The share of instances that actually belong to a given intent that the model correctly identified. High recall means most relevant instances were captured.

F1 Score: The harmonic mean of precision and recall, which gives a single metric for evaluating model performance.

In my experience, confusion matrices are a useful companion to these metrics for visualizing where the model misclassifies intents. This allows for targeted improvements in particular areas.
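
These metrics are easy to compute per intent from a confusion matrix. The sketch below does so with the standard library only; the label lists are invented for illustration, and the function name is an assumption rather than an API from any particular library.

```python
from collections import Counter


def per_intent_metrics(true_labels, predicted_labels):
    # confusion[(actual, predicted)] counts how often each pairing occurred
    confusion = Counter(zip(true_labels, predicted_labels))
    intents = sorted(set(true_labels) | set(predicted_labels))
    report = {}
    for intent in intents:
        tp = confusion[(intent, intent)]
        fp = sum(confusion[(other, intent)] for other in intents if other != intent)
        fn = sum(confusion[(intent, other)] for other in intents if other != intent)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[intent] = {"precision": precision, "recall": recall, "f1": f1}
    return confusion, report


# Hypothetical validation results
y_true = ["order_status", "order_status", "order_status",
          "product_return", "product_return", "tech_support"]
y_pred = ["order_status", "order_status", "product_return",
          "product_return", "product_return", "order_status"]

confusion, report = per_intent_metrics(y_true, y_pred)
for intent, scores in report.items():
    print(intent, {name: round(value, 2) for name, value in scores.items()})
```

In this made-up run, `product_return` has perfect recall but a precision of about 0.67 because one order inquiry was misrouted to it, while `tech_support` scores zero across the board: a signal to add training examples for that intent.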

Best Practices and Tips

When it comes to intent recognition, the efficacy of the system relies on several key factors. Drawing on my experience in the transcription industry and on current research, I've compiled a list of best practices and tips that can significantly improve the accuracy and dependability of intent recognition models.

Continuous Model Improvement Through Iterative Training

- Regularly retrain your models with fresh data to adapt to evolving user behavior and language patterns.

- Incorporate user feedback into the training process, such as adding utterances that the model fails to recognize, to refine the model's understanding.

- Regular updates to the model enhance its performance and ensure it stays relevant over time.

Handling Ambiguous or Unclear Intents

- Define clear and distinct intents during the design phase to mitigate ambiguity, a common challenge in intent recognition.

- Implement fallback mechanisms for unclear user inputs. For example, the system could prompt the user for clarification instead of responding incorrectly.

- Ensure that related intents are well defined and separate from each other.
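
A fallback mechanism can be as simple as a confidence check on the classifier's output. In the sketch below, the threshold values, function name, and wording of the clarification prompt are all assumptions for illustration; suitable thresholds depend on your model and should be tuned on validation data.

```python
def resolve_intent(probabilities, threshold=0.6, margin=0.15):
    """Return (intent, None) when confident, else ("clarify", question)."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    top_intent, top_score = ranked[0]
    runner_up_score = ranked[1][1] if len(ranked) > 1 else 0.0

    # Fall back when the best score is low or too close to the second best
    if top_score < threshold or top_score - runner_up_score < margin:
        options = " or ".join(intent for intent, _ in ranked[:2])
        return "clarify", f"Just to confirm, is this about {options}?"
    return top_intent, None


# Hypothetical classifier outputs
print(resolve_intent({"order_status": 0.90, "product_return": 0.10}))
print(resolve_intent({"order_status": 0.48, "product_return": 0.45, "tech_support": 0.07}))
```

The first call returns the intent directly; the second returns a clarification question naming the two closest candidates instead of guessing.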

Incorporating Human-in-the-Loop Review for Greater Accuracy

- Implement a human-in-the-loop approach, where human reviewers evaluate ambiguous or misclassified instances and provide corrective feedback.

- Include a review process where customer service agents flag interactions that the intent recognition model has not correctly categorized.

- This feedback loop helps continuously refine training data and improve overall accuracy.

Ethical and Privacy Considerations When Using Real Customer Data

- Prioritize ethical considerations and data privacy when handling sensitive user data to maintain trust and compliance with regulations like GDPR.

- Implement active learning techniques that allow models to learn from user interactions without compromising privacy.

- Anonymize user data and be transparent about how the data will be used in training models.
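
As a starting point for anonymization, obvious identifiers can be masked before transcripts enter a training set. The regular expressions below are deliberately simple assumptions that catch common email and phone formats only. They are not sufficient for regulatory compliance on their own, since names, addresses, and account numbers need dedicated handling.

```python
import re

# Simplified patterns; real-world PII detection needs broader coverage
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


sample = "Reach me at jane.doe@example.com or +1 (555) 010-2345 about order 4821."
print(redact(sample))
# Reach me at [EMAIL] or [PHONE] about order 4821.
```

The short order number survives because the phone pattern requires at least nine characters, which keeps intent-relevant details intact while removing contact data.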

Diverse Training Data

- Aim to include a wide range of user inputs in your training dataset, covering various intents, phrasings, dialects, and common misspellings.

- Use representative datasets that reflect real-world interactions to improve model performance.

- Consider incorporating data augmentation techniques, such as paraphrasing or synonym replacement, to expand your dataset and improve model generalization.
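
Synonym replacement, one of the augmentation techniques mentioned above, can be sketched with a small hand-written synonym table. The table and function name are hypothetical; in practice the synonyms would come from a thesaurus or a paraphrasing model, and generated variants should be reviewed, because mechanical substitution can produce awkward phrasing.

```python
import itertools

# Hand-written synonym table, for illustration only
SYNONYMS = {
    "return": ["send back", "give back"],
    "product": ["item"],
}


def augment(utterance, synonyms=SYNONYMS):
    """Generate every variant obtainable by swapping in listed synonyms."""
    words = utterance.lower().split()
    options = [[word] + synonyms.get(word, []) for word in words]
    variants = {" ".join(choice) for choice in itertools.product(*options)}
    variants.discard(" ".join(words))  # drop the unchanged original
    return sorted(variants)


for variant in augment("I want to return this product"):
    print(variant)
```

One seed utterance yields five extra training examples here, all inheriting the original's intent label.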

Regularly Evaluate and Update the Model

- Regularly assess your model's performance using metrics such as precision, recall, and F1-score to identify improvement areas.

- Revisit your training examples or expand the definitions of intents if certain intents display a low recall rate.

Real-World Examples and Case Studies

Seeing intent recognition in practical applications provides valuable insight into its effectiveness and versatility across industries. In my journey through the transcription and NLP landscape, I've encountered many real-world examples that illustrate how intent recognition strengthens user interactions and improves operational efficiency. Here, I'll share several case studies that underscore its impact in different contexts.

Customer Support Call Analysis to Reduce Handle Times

Recently, a leading telecommunications company deployed an intent recognition system to analyze customer support calls. To categorize inquiries into billing issues, technical support requests, and service upgrades, the company trained a machine learning model on historical call transcripts, with excellent results:

- 30% Reduction in Average Handle Time: Automatic call categorization let agents quickly access information and resources tailored to the identified intent.

- 25% Increase in Customer Satisfaction Scores: Addressing customer needs more efficiently improved the overall experience and led to notably higher satisfaction ratings.

This case shows how intent recognition can overhaul customer support operations and improve service delivery.

Sales Calls and Lead Qualification through Intent Detection

Another compelling example comes from a leading sales organization seeking to improve its lead qualification process. By integrating an NLP-based intent recognition system into its sales call analysis, it could recognize potential leads' intents more precisely. Key outcomes included:

- Improved Lead Scoring Accuracy: The intent recognition system analyzed conversations to determine whether a lead showed interest in purchasing, needed additional information, or wasn't ready to buy, enabling the sales team to prioritize high-potential leads effectively.

- Higher Conversion Rates: With better-qualified leads, the sales team experienced a 20% uptick in conversion rates over three months.

This application illustrates how intent recognition can significantly influence sales effectiveness by offering actionable insights from conversations.

Healthcare Conversations for Patient Inquiry Patterns

In the healthcare sector, intent recognition has proven valuable for understanding patient inquiries and improving communication between healthcare providers and patients. One notable case is a hospital system that deployed an NLP solution to examine patient phone calls and online inquiries:

- Identification of Prevalent Patient Concerns: The intent recognition model categorized inquiries related to appointment scheduling, symptom reporting, and medication questions, revealing patterns in patient concerns that had previously gone unnoticed.

- Improved Resource Allocation: By understanding patient intents better, the hospital could allocate resources more effectively, for example by increasing staff during peak inquiry times for certain concerns.

This case showcases how intent recognition can boost patient engagement and optimize healthcare delivery by proactively addressing common inquiries.
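
To illustrate how categorized inquiries could inform staffing, here is a minimal Python sketch that tallies intents by hour and finds the busiest hour for a given concern. The sample records, intent labels, and function names are hypothetical and are not taken from the hospital's actual system.

```python
from collections import Counter, defaultdict

# Hypothetical records: (hour of day, intent label assigned by a classifier).
inquiries = [
    (9, "appointment_scheduling"),
    (9, "appointment_scheduling"),
    (9, "medication_question"),
    (10, "symptom_reporting"),
    (14, "appointment_scheduling"),
    (14, "medication_question"),
    (14, "medication_question"),
]


def intents_by_hour(records):
    """Count how often each intent occurs in each hour."""
    counts = defaultdict(Counter)
    for hour, intent in records:
        counts[hour][intent] += 1
    return counts


def peak_hour(records, intent):
    """Return the hour with the most inquiries for `intent`, or None if it never occurs."""
    counts = intents_by_hour(records)
    per_hour = {hour: c[intent] for hour, c in counts.items() if c[intent] > 0}
    if not per_hour:
        return None
    return max(per_hour, key=per_hour.get)


print(peak_hour(inquiries, "medication_question"))
```

In a real deployment the records would come from classified call transcripts rather than a hard-coded list, and the tallies would likely be broken down by weekday as well.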

E-commerce Chatbots for Enhanced Shopping Experience

E-commerce platforms have increasingly adopted intent recognition to improve customer interactions through chatbots. A global e-commerce company implemented an NLP-powered chatbot that could understand user intents related to product inquiries, order tracking, and returns:

- 30% Faster Query Resolution: The chatbot accurately identified user intents and provided timely responses, reducing the time customers spent awaiting assistance.

- 25% Higher Customer Satisfaction Scores: Customers reported a more satisfying shopping experience owing to the chatbot's ability to address their needs efficiently.

This example demonstrates how intent recognition can transform customer service in e-commerce by enabling personalized interactions that enhance the user experience.

Future Trends in Intent Recognition from Transcription

Looking ahead, intent recognition from transcription is set for significant advances, driven by technological innovation and evolving user expectations. Drawing on my experience and recent research, let's explore the key trends shaping its future.

Advances in End-to-End (E2E) Models

One of the most significant advances in intent recognition is the shift toward end-to-end (E2E) models. These models combine automatic speech recognition (ASR) and natural language understanding (NLU) in a single architecture.

The advantage of this approach is that it reduces the error propagation that commonly arises when separate ASR and NLU systems are chained together: a word misrecognized by the ASR stage is passed to the NLU stage as if it were correct. Recent research indicates that E2E models can decode user intents directly from raw audio, eliminating the intermediate transcription step.

Enhanced Multimodal Understanding

Another noteworthy trend is the growing use of multimodal inputs, where audio, visual, and textual data together contribute to a more comprehensive understanding of user intent. For instance, analyzing a user's facial expressions or gestures alongside the audio gives intent classification richer context.

Contextual Understanding and Sentiment Analysis

Intent recognition systems are also likely to improve in contextual understanding, focusing on the subtleties of human language, including idioms, slang, and emotional undertones.

This capability would enable richer interactions in which machines respond appropriately to the emotional context of a conversation.

Automation and Real-Time Processing

Automation and real-time processing are another key driver. The ability to process large datasets quickly will enable organizations to respond promptly to customer inquiries and adjust strategies as trends emerge.

For instance, AI-powered tools are under development to analyze large volumes of emails or social media posts in near real time and identify user intents from them.

Ethical Considerations and Data Privacy

With advancements in technology come significant ethical considerations concerning data privacy and security. As intent recognition systems increasingly rely on personal data to improve performance, ensuring compliance with regulations like GDPR becomes paramount.

Future advancements will likely focus on creating transparent models that explain how user data is used while ensuring individual privacy.

FAQ Section

As we wrap up this comprehensive guide on intent recognition from transcription, it’s essential to address some common questions that developers and practitioners may have. Below are frequently asked questions that can provide further clarity on the topic.

1. What is intent recognition, and why is it important?

Intent recognition is the process of analyzing user inputs—whether spoken or written—to determine the underlying intention behind those inputs. It is crucial for enhancing user experiences in various applications, such as chatbots, voice assistants, and customer support systems. By accurately identifying user intents, businesses can provide more relevant responses, improve customer satisfaction, and streamline operations.

2. How does speech-to-text transcription relate to intent recognition?

Speech-to-text transcription converts spoken language into written text, which serves as the primary input for intent recognition systems. The accuracy of the transcription significantly impacts the effectiveness of intent recognition; errors in transcription can lead to misinterpretation of user intents. Therefore, high-quality transcription is essential for successful intent recognition.
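
One common way to quantify transcription accuracy is word error rate (WER): the number of word-level substitutions, deletions, and insertions needed to turn the transcript into a reference, divided by the number of reference words. The sketch below is a minimal pure-Python illustration; the function name and sample sentences are hypothetical. It shows how a single misrecognized word ("cancel" heard as "counsel") produces a small WER yet could change the detected intent entirely.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between the two texts, divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# One substituted word out of six reference words.
wer = word_error_rate("i want to cancel my order", "i want to counsel my order")
print(round(wer, 3))
```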

3. What are some common applications of intent recognition?

Intent recognition has a wide range of applications, including:

- Customer Support: Automating responses to common inquiries and routing calls based on identified intents.

- Chatbots: Enabling chatbots to understand user requests and provide appropriate responses.

- Voice Assistants: Allowing devices like Amazon Alexa or Google Assistant to interpret voice commands accurately.

- Market Research: Analyzing customer feedback and interactions to gain insights into consumer behavior.
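
As a rough illustration of the customer support case, routing by identified intent can be as simple as a lookup table with a fallback for low-confidence predictions. The intent names, queue names, and the 0.7 threshold below are all hypothetical choices for this sketch; a real system would tune them against its own data.

```python
# Hypothetical mapping from intent labels to support queues.
ROUTES = {
    "billing_issue": "billing_team",
    "technical_support": "tech_support",
    "cancel_service": "retention_team",
}
FALLBACK_QUEUE = "general_agent"


def route_call(intent, confidence, threshold=0.7):
    """Pick a queue for a call given the predicted intent and its confidence.

    Low-confidence or unknown intents go to a human generalist so that
    uncertain predictions are not routed to the wrong team.
    """
    if confidence < threshold:
        return FALLBACK_QUEUE
    return ROUTES.get(intent, FALLBACK_QUEUE)


print(route_call("billing_issue", 0.92))
print(route_call("billing_issue", 0.40))
```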

4. What are the key challenges in implementing intent recognition systems?

Some common challenges include:

- Ambiguity in Language: Users may express similar intents in various ways, making it difficult for models to classify them accurately.

- Noise in Audio Data: Background noise or overlapping speech can hinder accurate transcription and intent identification.

- Domain-Specific Jargon: Different industries have unique terminologies that may not be well-represented in general NLP models.

5. What tools and frameworks are recommended for developing intent recognition systems?

Several popular tools and frameworks can facilitate intent recognition development:

- Python Libraries: spaCy, NLTK, and Hugging Face Transformers are widely used for NLP tasks.

- Cloud Platforms: Google Cloud Natural Language API, Amazon Comprehend, and Azure Cognitive Services offer robust solutions for intent recognition.

- Pre-trained Models: Fine-tuning pre-trained models like BERT or GPT can accelerate development and often reaches good accuracy with relatively little labeled data.

6. How can I ensure my intent recognition model remains effective over time?

To maintain the effectiveness of your model:

- Continuously retrain it with new data to adapt to changing user behaviors.

- Incorporate user feedback to refine training datasets.

- Regularly evaluate model performance using metrics like precision, recall, and F1-score.
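
For reference, the metrics in the last point can be computed per intent from a list of true labels and a list of predicted labels. The following is a minimal pure-Python sketch with hypothetical labels; in practice a library such as scikit-learn would typically be used instead.

```python
def per_intent_metrics(y_true, y_pred):
    """Return precision, recall, and F1 for each intent label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    metrics = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)

        # Guard against division by zero when a label is never predicted or never present.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


# Hypothetical evaluation set of five utterances.
y_true = ["help", "help", "complaint", "info", "complaint"]
y_pred = ["help", "complaint", "complaint", "info", "info"]

for label, scores in per_intent_metrics(y_true, y_pred).items():
    print(label, scores)
```

Tracking these numbers per intent, rather than as a single overall accuracy, makes it easier to spot which intents degrade as user behavior changes.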

7. What ethical considerations should I keep in mind when using intent recognition?

When implementing intent recognition systems, prioritize ethical practices by:

- Ensuring data privacy and compliance with regulations like GDPR.

- Anonymizing user data when possible.

- Being transparent about how user data will be used in training models.
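
As one small example of anonymization, obvious identifiers such as email addresses and phone numbers can be masked in transcripts before they are stored or used for training. The patterns below are deliberately simple, hypothetical illustrations: they will miss many formats and do not catch names or addresses, so they are a starting point rather than a compliance solution.

```python
import re

# Simplified patterns for illustration only; real-world formats vary widely.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like digit sequences with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(redact("Call me at 555-123-4567 or email jane.doe@example.com."))
```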

8. How can I get started with building my own intent recognition system?

To start building your own intent recognition system:

- Define your target intents based on user needs.

- Collect and annotate training data with appropriate labels.

- Choose suitable tools and frameworks for development.

- Train your model using the annotated dataset and evaluate its performance.

- Implement best practices for continuous improvement and ethical considerations.
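
The steps above can be sketched end to end with a tiny bag-of-words Naive Bayes classifier written in plain Python. This is an illustrative stand-in, not the method used by any particular product: the class name, intents, and training sentences are hypothetical, and a production system would more likely use one of the libraries or pre-trained models mentioned earlier, with far more annotated data.

```python
import math
from collections import Counter, defaultdict

# Steps 1 and 2: define target intents and annotate a (tiny, hypothetical) dataset.
TRAINING_DATA = [
    ("i need help resetting my password", "request_help"),
    ("can you help me log in", "request_help"),
    ("i am unhappy with the service", "complaint"),
    ("this is the worst experience ever", "complaint"),
    ("what are your opening hours", "request_info"),
    ("where can i find pricing details", "request_info"),
]


class NaiveBayesIntentClassifier:
    """Multinomial Naive Bayes over whitespace tokens with add-one smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # intent -> word -> count
        self.intent_counts = Counter()           # intent -> number of examples
        self.vocabulary = set()

    @staticmethod
    def tokenize(text):
        return text.lower().split()

    def fit(self, examples):
        for text, intent in examples:
            self.intent_counts[intent] += 1
            for word in self.tokenize(text):
                self.word_counts[intent][word] += 1
                self.vocabulary.add(word)
        return self

    def predict(self, text):
        total_examples = sum(self.intent_counts.values())
        vocab_size = len(self.vocabulary)
        best_intent, best_score = None, -math.inf
        for intent, n_examples in self.intent_counts.items():
            # Log prior plus smoothed log likelihood of each token.
            score = math.log(n_examples / total_examples)
            denom = sum(self.word_counts[intent].values()) + vocab_size
            for word in self.tokenize(text):
                score += math.log((self.word_counts[intent][word] + 1) / denom)
            if score > best_score:
                best_intent, best_score = intent, score
        return best_intent


# Steps 3 and 4: train the model and try it on unseen transcript sentences.
model = NaiveBayesIntentClassifier().fit(TRAINING_DATA)
print(model.predict("please help me with my password"))
```

With only six training sentences this model is a toy, but the same fit, predict, and evaluate loop applies when the classifier is swapped for a stronger one.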
