A pivotal element in effective transcription analysis is topic detection, the process of identifying key themes or subjects in audio-to-text transcripts. Recognizing these topics offers several benefits:

- Streamlined Content Organization: By grouping related topics together, you gain a structured understanding of potentially diverse data sets. This in turn equips you to process large volumes of data efficiently, track key themes faster, and save valuable time during analysis.

- Enhanced Searchability: Main topics, once identified within a transcript, can be tagged. This organized tagging provides users with improved searchability, facilitating their quest for relevant information and enabling quick retrievals. This could be particularly useful when navigating through large data sets or multiple transcripts.

- Actionable Insights: Pinpointing key topics in transcriptions can lead to actionable insights. For example, analyzing call center interactions could reveal recurring topics and help you understand customer pain points. This understanding can enhance service delivery or be used to create effective promotional material targeted at your audience.

As we delve deeper, it will become clear that topic detection is multifaceted and can sometimes be complex. It's key to remember that depending on your data set's nature and required specifics, different approaches or tools may be necessary.

Approaches to Topic Detection

There are several approaches to topic detection, each with its own merits and considerations. Some of them are:

- Keyword Identification: This is a straightforward approach where you identify common or standout words within a transcript. But remember, this doesn't always capture the depth of a conversation as it doesn’t consider the context in which words are used.

- Text Classification: This involves categorizing text into predefined groups based on certain criteria or patterns, helping distinguish different topics within a transcript. Machine learning techniques can be used in this classification, where a model is trained to classify text based on past examples.

- Topic Modeling: An advanced technique, topic modeling employs algorithms to identify the main themes within a document or set of documents. Latent Dirichlet Allocation (LDA) is a popular method in which each document is presumed to be a mixture of topics, and each topic a distribution over words. By applying LDA, you can identify key topics and their related words in a transcript.
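To make the simplest of these approaches concrete, here is a minimal sketch of keyword identification by word frequency. The stop-word list, the `top_keywords` helper, and the sample sentence are illustrative assumptions, not part of any particular tool; a real project would use a fuller stop-word list.

```python
import re
from collections import Counter

# Small illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {
    "the", "a", "an", "and", "or", "to", "of", "in", "is", "it",
    "we", "our", "for", "on", "with", "that", "this", "was", "are",
}

def top_keywords(transcript, n=3):
    """Return the n most frequent non-stop-words as (word, count) pairs."""
    words = re.findall(r"[a-z']+", transcript.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    return counts.most_common(n)

sample = (
    "The billing page was slow and the billing total was wrong. "
    "We fixed the billing bug, but refunds are still slow."
)
keywords = top_keywords(sample, n=2)
print(keywords)
```

As noted above, raw counts like these ignore context, so they work best as a first pass before classification or topic modeling.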

A detailed understanding of these methodologies and their application helps unlock the full potential of topic detection in transcription. Stay tuned for our upcoming segment where we will dive deeper into the practical application of these techniques. FasterCapital's Guide to NLP Topic Detection offers an in-depth examination of topic detection methodologies and their wider applications.

Step-by-Step Guide to Create Topic Detection for your Transcript

Here's the straightforward, seven-step guide to creating "Topic Detection" with TranscribeTube:

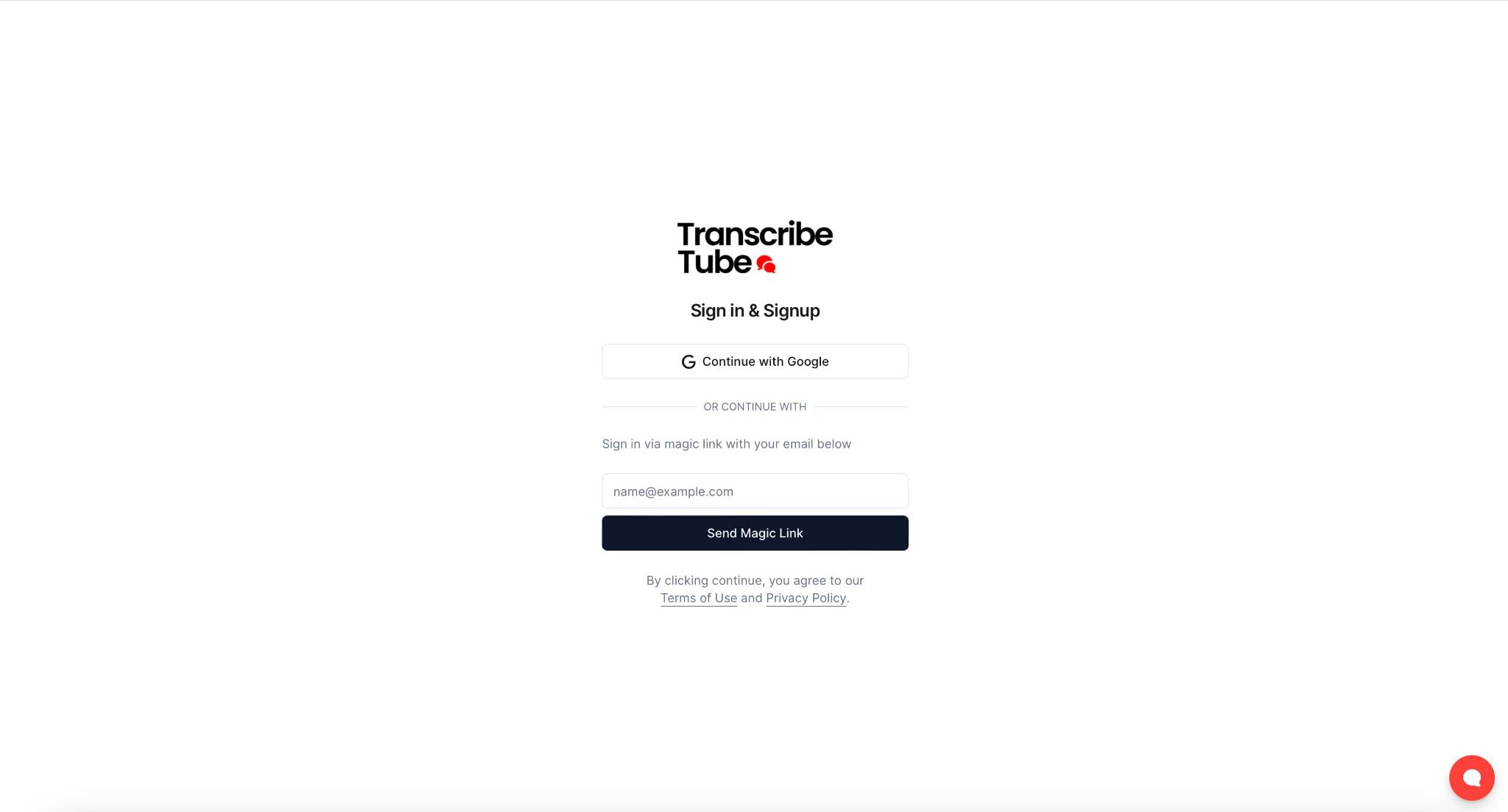

Sign up on Transcribetube.com

Start by signing up on TranscribeTube. As a welcome gift, new users are given free transcription time, an excellent opportunity to explore the service.

On the home page of TranscribeTube, locate the 'Sign Up' button and follow the on-screen instructions to create your account.

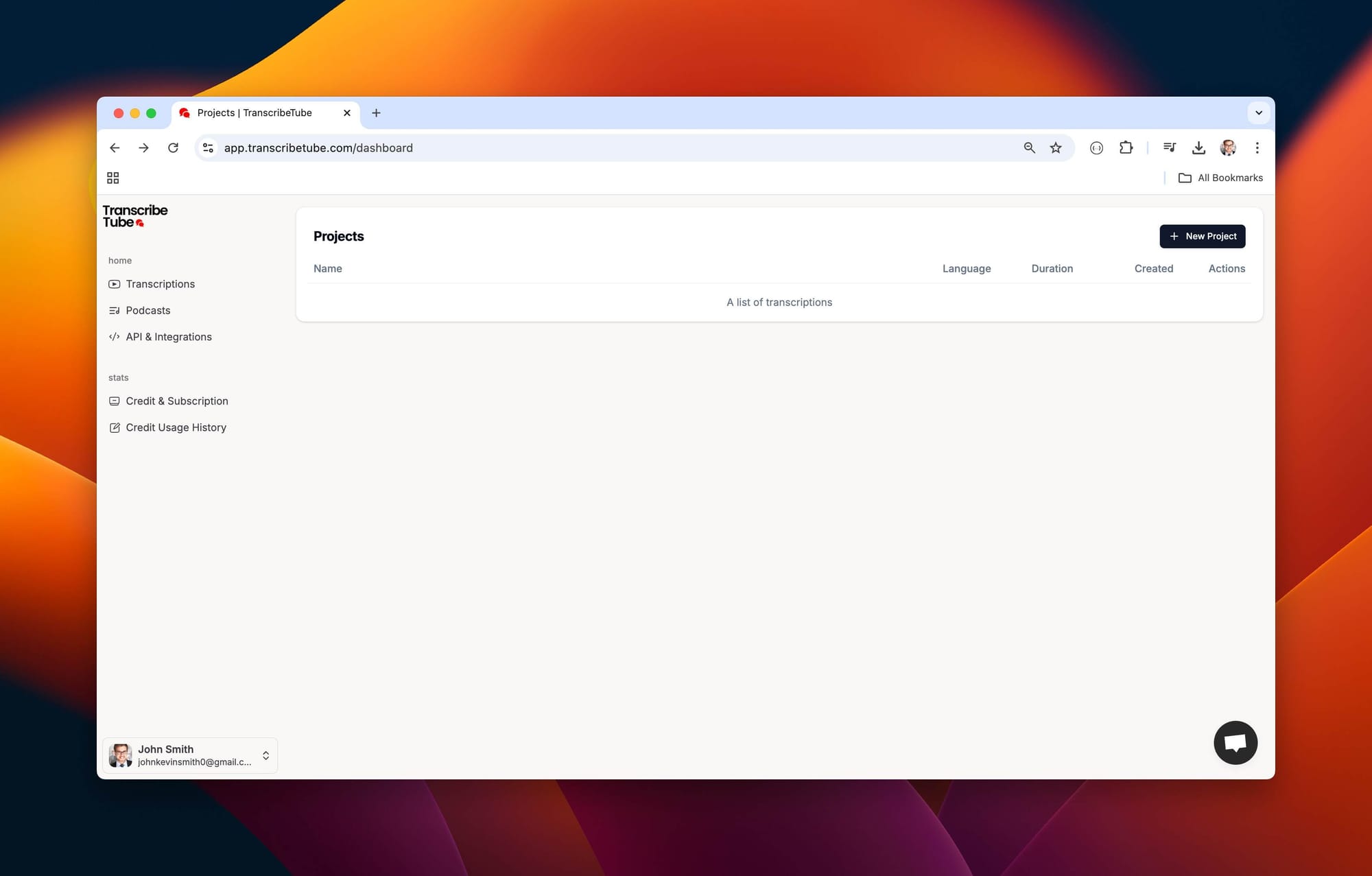

1) Navigate to dashboard.

Once you're logged in, head to your dashboard.

How to: Navigate to your dashboard, where you can see a list of the transcriptions you have made before.

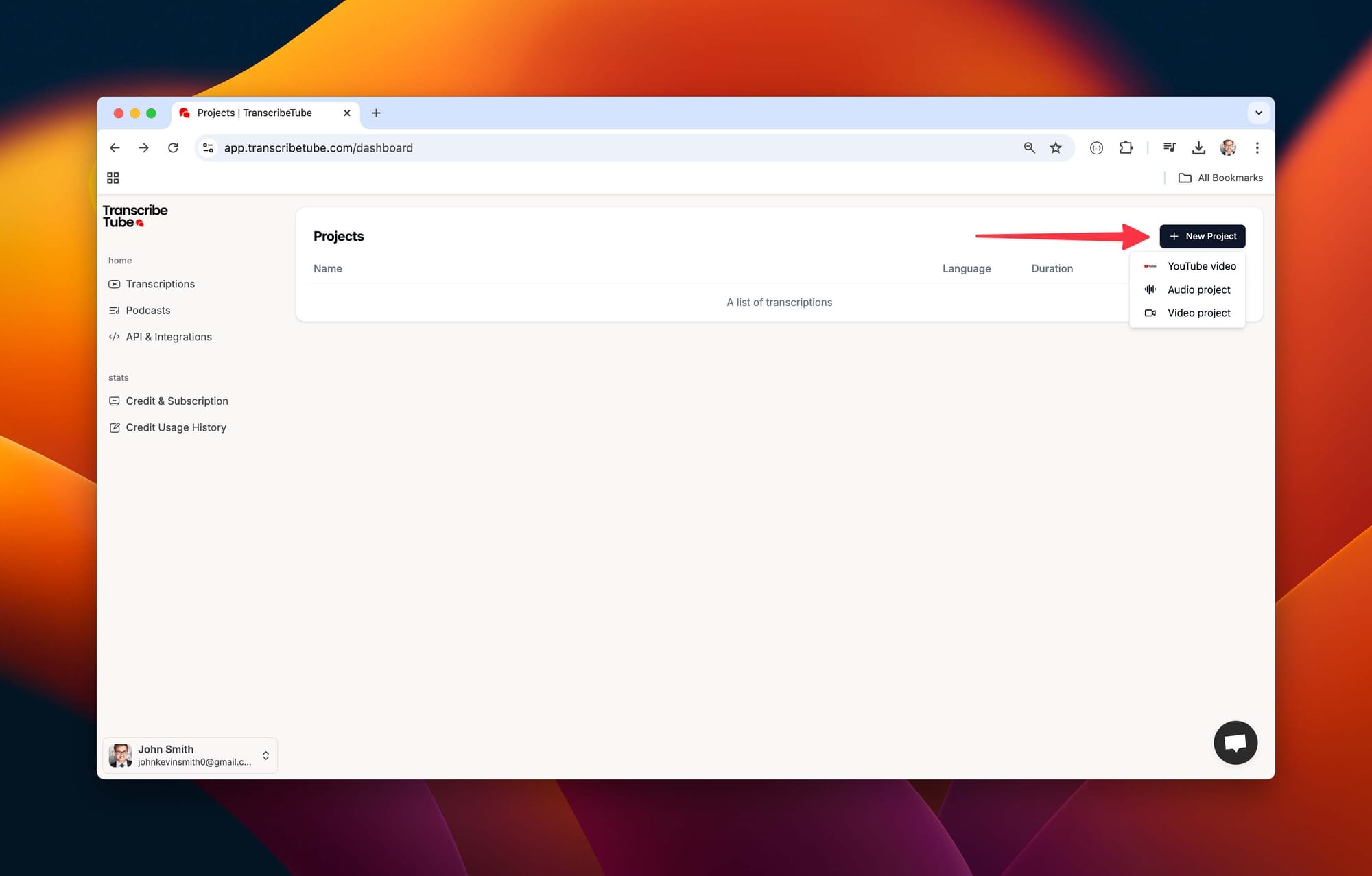

2) Create a New Transcription

Once you're logged in, it's time to transcribe your first video.

How to: Navigate to your dashboard, click on 'New Project,' and select the type of recording file you want to transcribe.

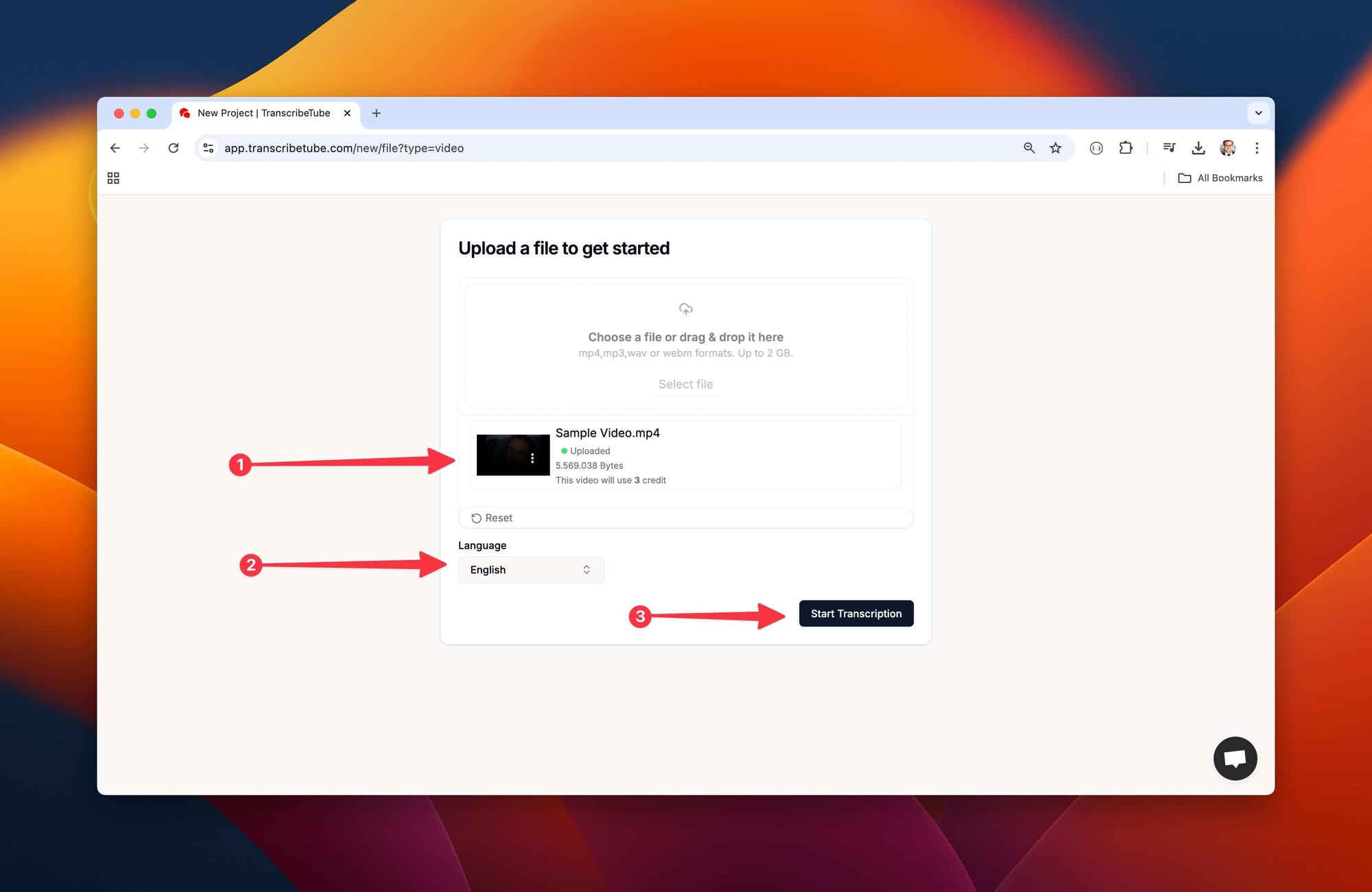

3) Upload a file to get started

After you select the type of file you want to transcribe, upload it to the tool to start the transcription process.

How to: Simply drag in or select the file you want to transcribe, then choose the language you want for the transcript.

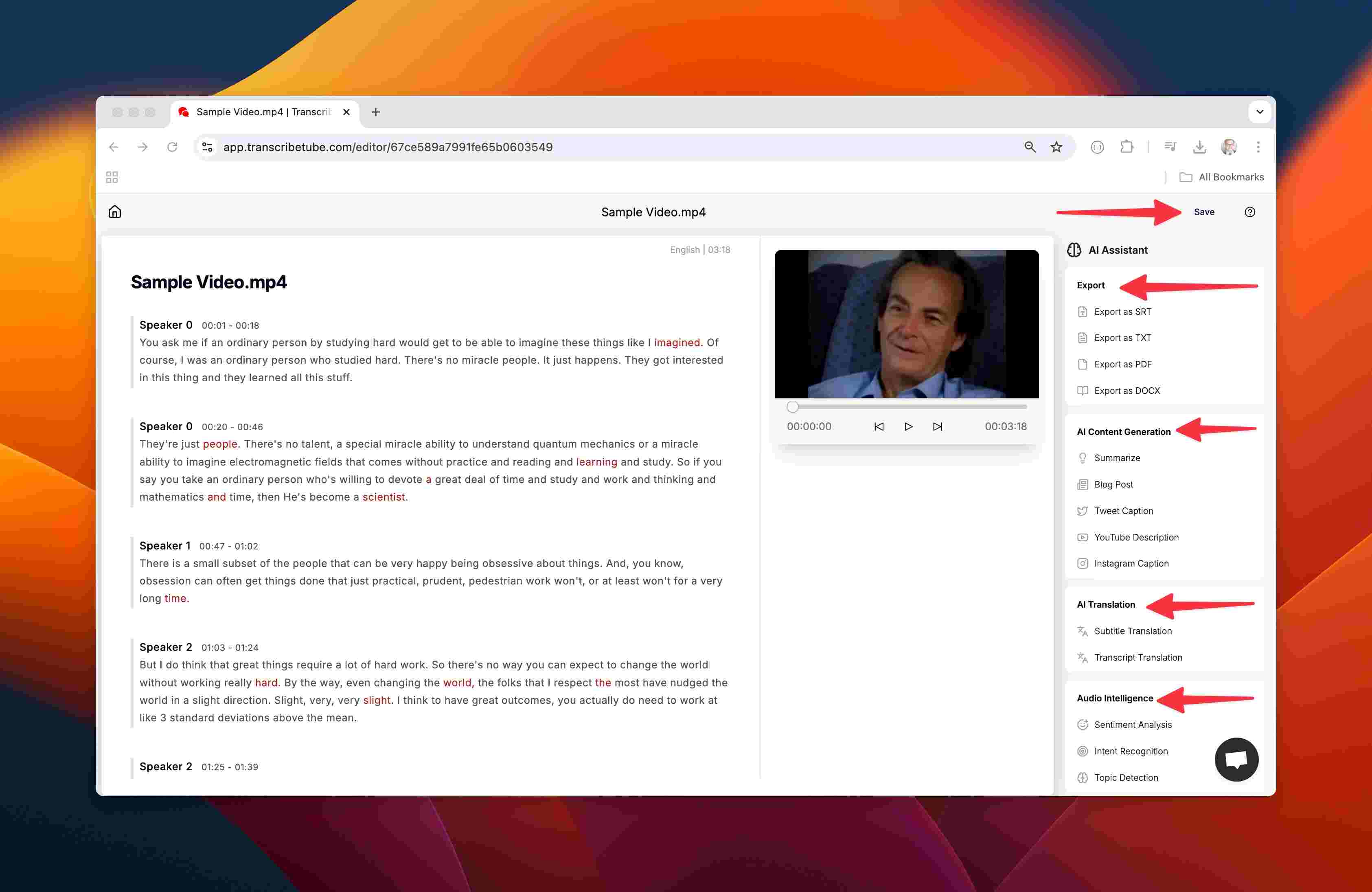

4) Edit Your Transcription with

Transcriptions might need a tweak here and there. Our platform allows you to edit your transcription while listening to the recording, ensuring accuracy and context.

You can also export the transcript in different file formats, and several AI-powered options are available.

Once your work is done, you can save your transcript from the upper right corner.

5) Start Topic Detection

How to: Click "Topic Detection" in the bottom right corner.

6) Create Intelligence

How to: If your file does not have audio intelligence, our special AI tools will help you to create it.

7) Final Output

How to: Your Sentiment Analysis, Intent Recognition, and Topic Detection are now ready to use.

Understanding Topic Detection From Transcription

Harnessing the power of topic detection from transcription necessitates grasping the foundational concepts of transcription and topic detection. This section will unpack these concepts and their connection in offering valuable insights.

Defining Transcription

Transcription involves converting spoken discourse into written text, a practice prevalent across several domains, including:

- Podcasts: Where discussions are transcribed to facilitate accessibility and content repurposing.

- Interviews: Where conversations are documented in writing for analysis and reporting.

- Meetings: Establishing records to ensure accountability and track follow-up actions.

By transforming audio content into text, transcription permits an efficient way to make spoken content both searchable and analyzable.

Understanding Topic Detection

Topic detection refers to the process of scanning text to identify main subjects or themes. Cutting-edge algorithms and techniques are deployed to discern patterns in content, and the applications of topic detection vary, encompassing:

- Data Analysis: It can help in extracting insights from extensive datasets.

- Content Marketing: Strategies can be tailored based on detected themes.

- SEO (Search Engine Optimization): Visibility of content can be enhanced by focusing on relevant topics.

The ultimate goal of topic detection is to condense voluminous information into manageable insights that can drive decision-making.

Bridging Transcription and Topic Detection

The integration of transcription and topic detection offers a powerful combination for deeper analysis of unstructured audio content. Here's how these two elements intertwine:

- Transforming Speech to Text: Transcription facilitates the conversion of spoken words into a format that's ripe for analysis.

- Employing NLP Techniques: Post transcription, natural language processing (NLP) techniques can be applied to extract overarching themes from the text. For example, models like Latent Dirichlet Allocation (LDA) can identify prevalent topics from the transcribed content.

As we venture further into the blog post, we'll explore topic detection's significance in contemporary workflows, offering an understanding of how it enhances content strategies, streamlines research, and fosters improved user experiences. Furthermore, we'll dive into methodologies to effectively implement topic detection from transcription, providing you with handy tools and tactics to enhance your data analysis efforts.

For a deeper understanding and practical details, consider exploring AssemblyAI's Topic Detection documentation.

Importance of Topic Detection in Modern Workflows

In today’s rapidly evolving digital landscape, deriving meaningful insights from audio and video content has become vital. Topic detection from transcription contributes significantly to enhancing workflows across diverse sectors. This section delves into how effective topic detection can catalyze notable improvements in content strategy, research efficiency, and user experience.

Content Strategy Enhancement

One of the salient benefits of topic detection is its capacity to strengthen content strategy. By spotting recurring themes within transcribed content, organizations can create targeted materials that resonate more effectively with their audiences. Here’s how this can be achieved:

- Identifying Trends: The analysis of multiple transcriptions can reveal trends and regular topics relevant to audiences. This information can guide content creation, ensuring materials align with the interests of audiences.

- Tailored Messaging: Comprehending key themes allows for the tailoring of marketing messages, making them more engaging and relevant. For example, if a podcast frequently discusses sustainability, marketing campaigns can be created to align with this theme, thereby increasing audience engagement.

By employing topic detection, organizations can design more focused content strategies that not only attract but also retain their audience.

Streamlined Research and Analysis

Another considerable advantage of topic detection is streamlining research and analysis processes. Considering the substantial volume of audio and video content available today, locating relevant information can be time-consuming. Here’s how topic detection can assist with this:

- Quick Access to Relevant Sections: Rather than manually trawling through hours of recordings, data analysts can employ topic detection algorithms to swiftly locate pertinent sections within transcriptions. This time-saving efficiency allows analysts to center their efforts on deriving insights rather than combing through data.

- Enhanced Data Organization: By categorizing transcribed content based on identified topics, you can create structured databases that simplify information retrieval. This is especially beneficial to research teams that need to reference specific discussions or points made during interviews or meetings.
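As a rough illustration of both points, the sketch below builds a simple topic index that maps each topic to the recordings and timestamps where it appears. The segment fields (`recording`, `start_sec`, `topics`) and the sample data are hypothetical; they stand in for whatever your transcription and topic detection steps produce.

```python
from collections import defaultdict

def build_topic_index(segments):
    """Map each topic to a list of (recording, start time in seconds) pairs."""
    index = defaultdict(list)
    for seg in segments:
        for topic in seg["topics"]:
            index[topic].append((seg["recording"], seg["start_sec"]))
    return index

# Hypothetical, already-tagged transcript segments.
segments = [
    {"recording": "call_01", "start_sec": 95, "topics": ["pricing", "refunds"]},
    {"recording": "call_02", "start_sec": 30, "topics": ["pricing"]},
    {"recording": "call_02", "start_sec": 410, "topics": ["onboarding"]},
]

index = build_topic_index(segments)
print(index["pricing"])  # every place where pricing was discussed
```

With an index like this, an analyst can jump straight to the relevant moments instead of replaying whole recordings.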

Streamlining research procedures through effective topic detection not only boosts productivity but also heightens the overall quality of analysis.

Improved User Experience

Finally, the implementation of topic detection significantly contributes to improving user experience across varied platforms such as podcasts, webinars, and online courses. Here's how:

- Better Search Functionality: When users search on platforms that implement topic detection, they are more likely to find relevant, specific content swiftly, which enhances user satisfaction and fosters continued engagement with the platform.

- Personalized Recommendations: An understanding of users' interests through detected topics can be utilized to recommend personalized content. Consequently, user retention is improved as the listener gets content aligned with their preferences.

- Content Accessibility: Transcribed content with clearly delineated topics is easier for users to navigate. This is crucial for educational platforms, where learners need to locate specific topics or lectures swiftly.

To summarize, seamlessly integrating topic detection from transcription into contemporary workflows can greatly enhance organizational efficiency and notably improve the user experience. As we proceed in this blog post, we will probe deeper into various approaches essential for effectively implementing topic detection, ensuring you are armed with the necessary tools to leverage this potent capability in your data analysis efforts.

Approaches to Topic Detection From Transcription

In the realm of topic detection from transcription, there are several approaches to consider, each with unique advantages and challenges. In this segment, you will find an overview of different methods including manual versus automated identification, keyword extraction techniques, advanced NLP methods, and the use of pre-existing tools and APIs.

Manual vs. Automated Topic Detection

Manual topic detection involves human analysts studying transcriptions to identify key themes and topics, with the following pros and cons:

Pros of Human-Based Analysis:

- Contextual Understanding: Humans are adept at interpreting nuance and context that may elude algorithms.

- Quality Control: Topic identification can maintain high accuracy by leveraging the experience of analysts.

Cons of Human-Based Analysis:

- Time-Consuming: Manual scrutiny of transcripts is labour-intensive, potentially leading to inordinate delay in data processing.

- Scalability Issues: As the volume of content escalates, solely relying on human analysis becomes less practical.

Conversely, automated topic detection deploys algorithms and machine learning models for a quick and efficient identification process.

Keyword Extraction Techniques

Keyword extraction is a fundamental strategy in topic detection. This technique starts by identifying the words or phrases in a transcribed text that carry the most significance. Fundamental techniques include TF-IDF (Term Frequency-Inverse Document Frequency) and keyword frequency analysis.

However, these straightforward methods aren't without faults. They lack an understanding of context, which can lead to misinterpretation of topics.
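To show what TF-IDF actually computes, here is a minimal from-scratch sketch. It uses the plain textbook weighting (term frequency times the log of inverse document frequency); libraries often apply smoothed variants, so their numbers will differ. The helper names and the three sample transcripts are assumptions made for illustration.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def tf_idf(documents):
    """Return one {term: score} dict per document."""
    tokenized = [tokenize(d) for d in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1  # avoid dividing by zero on empty documents
        scores.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores

transcripts = [
    "the refund was delayed and the refund was wrong",
    "the delivery was late",
    "the app crashed and the login failed",
]
scores = tf_idf(transcripts)
best_term = max(scores[0], key=scores[0].get)
print(best_term)
```

A word such as "the" that appears in every transcript scores zero, while a word concentrated in one transcript rises to the top. The score still says nothing about the context in which the word was used.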

Advanced Natural Language Processing (NLP) Methods

Advanced NLP methods have been designed to counter the drawbacks of basic keyword extraction techniques. A popular method, Latent Dirichlet Allocation (LDA), has gained traction for topic modeling. It operates on the assumption that each document is a mixture of topics, and that each topic is characterized by a distribution over words.
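For readers who want to see the LDA assumption in action, the following is a toy collapsed Gibbs sampler written in plain Python. It is a teaching sketch under simplifying assumptions (tiny pre-tokenized documents, fixed hyperparameters `alpha` and `beta`, a fixed random seed), not a production implementation; in practice you would more likely use a library such as Gensim. The function name and sample data are invented for this example.

```python
import random

def lda_gibbs(docs, n_topics, n_iter=200, alpha=0.1, beta=0.01, seed=0):
    """Toy collapsed Gibbs sampler for LDA over tokenized documents."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    v = len(vocab)
    doc_topic = [[0] * n_topics for _ in docs]          # words per topic in each doc
    topic_word = [dict.fromkeys(vocab, 0) for _ in range(n_topics)]
    topic_total = [0] * n_topics                         # words assigned to each topic
    assignments = []
    # Start from a random topic assignment for every word.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assignments.append(z_doc)
    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the word's current assignment from the counts.
                k = assignments[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # Resample: (topic share in doc) x (word share in topic).
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta) / (topic_total[t] + v * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    # Per-document topic mixture and the top words of each topic.
    theta = [
        [(doc_topic[d][t] + alpha) / (len(doc) + n_topics * alpha)
         for t in range(n_topics)]
        for d, doc in enumerate(docs)
    ]
    top_words = [
        sorted(vocab, key=lambda w: topic_word[t][w], reverse=True)[:3]
        for t in range(n_topics)
    ]
    return theta, top_words

docs = [
    "refund billing invoice refund charge".split(),
    "billing charge invoice payment refund".split(),
    "login password account login reset".split(),
    "account password reset login email".split(),
]
theta, top_words = lda_gibbs(docs, n_topics=2)
print(theta)      # each row: how much of each topic a document contains
print(top_words)  # most frequent words assigned to each topic
```

Each row of `theta` is a document's mixture of topics, and each entry of `top_words` summarizes a topic by its most heavily assigned words, which mirrors the two assumptions described above. Results on such a small corpus vary with the seed and hyperparameters.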

In addition to LDA, advanced models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) use deep learning techniques to help gain an understanding of context and relationships between words.

Pre-built Tools and APIs

Pre-built tools and APIs can be used by those who want to implement topic detection without building a custom solution. Various established transcription and NLP toolkits are available, such as Google Cloud Speech-to-Text, Amazon Transcribe, and open-source libraries.

Choosing the right approach depends on specific requirements, with speed and scalability factors potentially favoring automated methods, while nuanced understanding might necessitate manual analysis. The following section will offer a step-by-step guide on effectively extracting topics from your transcribed content.

For a practical guide on implementing APIs for topic detection, see Rev AI's guide to its Topic Extraction API.

Step-by-Step Guide to Extracting Topics From Your Transcribed Content

Extracting meaningful topics from transcribed content involves a systematic procedure that can significantly bolster your data analysis capabilities. Here's a handy step-by-step guide to help you extract topics effectively from your transcriptions.

Choose a Transcription Method

Begin your topic extraction process with the selection of an appropriate transcription method. Here are two key options:

Automated Transcription Tools: These tools leverage AI and machine learning algorithms to convert speech into text quickly. A few examples include:

- Google Cloud Speech-to-Text: Offers robust transcription capabilities with support for multiple languages.

- Rev AI: Known for high accuracy, it also allows for topic extraction through its API.

- TranscribeTube: It excels in transcribing voice recordings or YouTube videos into text with good accuracy.

- Descript: This tool marries transcription with editing capabilities, simplifying text refining post transcription.

Professional Transcription Services: For maximum accuracy, especially in complex or technical discussions, opting for human transcription services might be prudent. Human transcribers are better placed to preserve nuance and context in the transcript.

Your choice depends on whether speed or accuracy is your priority.

Clean and Prepare Your Text

After transcribing your content, clean and prepare the text for analysis:

Removing Filler Words, Timestamps, and Formatting Errors: Eliminate extraneous elements that won't contribute to topic detection. This includes filler words like "um" or "uh," timestamps, and any formatting inconsistencies.

Ensuring Consistent Formatting for Better NLP Results: Standardize your text format (like consistent speaker labels or paragraph breaks) to facilitate better processing by NLP algorithms. Clean data enhances the accuracy of topic detection outputs.

Tools such as NVivo assist in refining and organizing transcriptions.
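The cleaning steps above can be sketched with a few regular expressions. This is a minimal example that assumes timestamps appear in square brackets such as `[00:01:15]` and that only a handful of fillers ("um", "uh", "er", "erm") need removing; the patterns and the `clean_transcript` helper are illustrative and should be adapted to your transcript format.

```python
import re

# Assumed timestamp format: [MM:SS] or [HH:MM:SS] in square brackets.
TIMESTAMP = re.compile(r"\[\d{1,2}:\d{2}(?::\d{2})?\]\s*")
# A few common fillers, together with the commas that usually surround them.
FILLERS = re.compile(r",?\s*\b(?:um+|uh+|erm?)\b,?", re.IGNORECASE)

def clean_transcript(text):
    text = TIMESTAMP.sub("", text)    # drop timestamps
    text = FILLERS.sub("", text)      # drop filler words
    text = re.sub(r"\s+", " ", text)  # normalize whitespace and line breaks
    return text.strip()

raw = (
    "[00:01:15] So, um, the pricing page is uh confusing.\n"
    "[00:01:22]   It hides, uh, the total."
)
cleaned = clean_transcript(raw)
print(cleaned)
```

Removing a filler also removes the commas around it, which occasionally drops a comma you would have kept, so it is worth spot-checking the cleaned output.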

Apply Topic Detection Techniques

With your cleaned transcript ready, you can begin applying topic detection techniques:

Running a Chosen NLP Model (LDA, Embedding-Based):

- Use models like Latent Dirichlet Allocation (LDA) for topic modeling, which identifies topics based on patterns of word co-occurrence.

- Meanwhile, models such as BERT or GPT capture the contextual relationships between words and could also be viable options.

Evaluating Topic Coherence and Adjusting Model Parameters: After running your selected model, assess how coherent the detected topics are. You may need to tweak parameters (like the number of topics) to boost relevance and clarity.
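One way to put a number on coherence is a UMass-style score, which rewards topics whose top words tend to appear in the same documents. The sketch below is a simplified version for illustration: it assumes each document is already a list of tokens and that every top word occurs in at least one document. The function name and sample data are assumptions, not the output of any specific tool.

```python
import math
from itertools import combinations

def umass_coherence(top_words, documents):
    """UMass-style coherence: higher (closer to zero or above) is more coherent."""
    doc_sets = [set(doc) for doc in documents]

    def doc_freq(*words):
        # Number of documents that contain all of the given words.
        return sum(all(w in s for w in words) for s in doc_sets)

    score = 0.0
    # For each pair, condition on the higher-ranked (earlier) word.
    for earlier, later in combinations(top_words, 2):
        score += math.log((doc_freq(later, earlier) + 1) / doc_freq(earlier))
    return score

documents = [
    ["refund", "billing", "invoice"],
    ["billing", "invoice", "charge"],
    ["login", "password", "reset"],
    ["password", "login", "account"],
]
coherent = umass_coherence(["billing", "invoice", "refund"], documents)
mixed = umass_coherence(["billing", "login", "reset"], documents)
print(coherent, mixed)
```

Comparing scores across runs with different numbers of topics gives a rough signal for which setting produces cleaner topics, though a human read-through of the top words remains the final check.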

Resources like Rev AI’s Topic Extraction API offer hands-on examples of implementing these techniques systematically.

Interpret and Refine

The final step involves interpreting the results and refining your approach:

Reviewing Output to Ensure Relevance: Analyze the extracted topics to confirm they align with your research objectives. This may entail verifying whether the topics truly reflect the key ideas discussed in the original audio.

Iterating on the Model or Method for Improved Accuracy: If certain topics seem irrelevant or aren't clear, revisit your model parameters or consider trying different models. This iterative process is key to unveiling meaningful insights.

These steps equip you to extract valuable topics proficiently from your transcribed content, enhancing your analytical capabilities and informing your decision-making process. In the next segment of this blog, we'll share best practices and tips for effective topic detection to further optimize your workflow and outcomes.

Best Practices and Tips for Effective Topic Detection

To maximize the effectiveness of topic detection from transcription, implementing best practices is essential. Here are key strategies that can help you achieve more accurate and meaningful results in your data analysis efforts.

High-Quality Audio and Accurate Transcription

One of the foundational elements of effective topic detection is ensuring that you start with high-quality audio and accurate transcription:

- Importance of Accurate Source Data: The quality of your transcription directly impacts the accuracy of topic detection. Clear audio recordings with minimal background noise lead to better transcription outcomes. If the transcription is riddled with errors, the subsequent analysis will also be flawed. Investing in high-quality recording equipment and choosing reliable transcription services or tools can significantly enhance data quality.

For example, using tools like Zoom for virtual meetings can provide high-quality audio, while services like Rev or Trint can ensure accurate transcriptions.

Regular Model Updates

The field of natural language processing is rapidly evolving, with new models and techniques emerging frequently. To maintain the effectiveness of your topic detection processes, consider the following:

- Staying Current with Evolving Language and Industry Jargon: Language is dynamic, and terminology can change based on trends, industries, and cultural shifts. Regularly updating your NLP models ensures that they remain relevant and capable of accurately interpreting contemporary language usage.

This might involve retraining models with new datasets or incorporating recent advancements in NLP technology. Keeping abreast of developments through resources like Towards Data Science or arXiv can provide insights into the latest methodologies.

Human-in-the-Loop Validation

While automated systems are powerful, they are not infallible. Incorporating a human-in-the-loop validation process can significantly enhance the reliability of topic detection:

- Combining Machine-Generated Insights with Expert Review: After running your topic detection algorithms, having a subject matter expert review the results can help identify any inaccuracies or misinterpretations. This hybrid approach leverages the efficiency of machine learning while ensuring that nuanced understanding is preserved.

For instance, after extracting topics from a series of interviews, an analyst could validate the relevance and coherence of those topics against their knowledge of the subject matter. This step not only improves accuracy but also builds trust in the insights derived from automated systems.
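A lightweight way to record the outcome of such a review is to compare the machine-detected topics with the topics the expert considers correct. The sketch below is a hypothetical helper, with invented topic labels, that reports which topics were confirmed, rejected, or missed, along with precision and recall.

```python
def review_topics(machine_topics, expert_topics):
    """Compare detected topics with an expert's list for the same transcript."""
    machine, expert = set(machine_topics), set(expert_topics)
    confirmed = machine & expert
    return {
        "confirmed": sorted(confirmed),
        "rejected": sorted(machine - expert),  # detected, but expert disagrees
        "missed": sorted(expert - machine),    # expert expected, not detected
        "precision": len(confirmed) / len(machine) if machine else 0.0,
        "recall": len(confirmed) / len(expert) if expert else 0.0,
    }

report = review_topics(
    machine_topics=["pricing", "onboarding", "weather"],
    expert_topics=["pricing", "onboarding", "support"],
)
print(report)
```

Tracking these numbers over time shows whether model updates are actually bringing automated output closer to expert judgment.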

Real-World Use Cases

Analyzing transcriptions for topics and sentiment has numerous practical applications across various fields. The use cases below highlight how organizations can leverage this technology to strengthen their operations and strategies.

Podcast Summaries and Highlights

Podcast transcription enables creators to generate summaries and highlights, widening the scope of their content's reach. By using sentiment analysis on different podcast segments, creators can pinpoint key moments that resonate with listeners, enabling them to:

- Create Engaging Content: Highlights of emotionally charged moments can draw in more listeners and improve engagement, as described in this article on Fireflies.

- Enhance SEO: Transcripts offer text-based content that search engines can index, thereby improving content discoverability.

Webinar and Lecture Topic Indexing

Transcription plays a significant role in indexing webinars and lectures, making it easier for attendees to locate specific topics within recorded sessions. Advantages of this approach include:

- Improved Searchability: Transcribing webinars allows organizations to create searchable databases, letting users find relevant information quickly.

- Enhanced Learning Experience: Students can revisit lectures efficiently and grasp crucial concepts without having to comb through entire recordings.

Market Research Interviews and Focus Groups

Transcribing market research interviews and focus group discussions offers valuable insights into consumer sentiments and preferences. This method enables businesses to:

- Identify Trends: Sentiment analysis of transcriptions helps companies pinpoint emerging trends in consumer behaviour.

- Enhance Product Development: Feedback received from customers and processed through sentiment analysis can inform improvements and innovations in products.

Improved SEO and Content Strategy

Transcription boosts accessibility and upgrades a company's content strategy through enhanced SEO practices:

Creating Pillar Content Based on Identified Topics

Businesses can generate comprehensive pillar content that addresses key topics their audience finds interesting by analyzing sentiments and themes recurring in transcriptions. This content aids in establishing authority in specific areas while driving traffic to websites.

Enhancing On-Page SEO for Video and Audio Content

Including transcripts on web pages hosting video and audio content lets search engines index the text, significantly improving search visibility. Important strategies include:

- Keyword Optimization: Incorporating relevant keywords from the transcript into the webpage enhances SEO performance.

- User Engagement: Offering transcripts prompts users to engage with the content in various formats, catering to different user preferences.

In conclusion, topic and sentiment analysis of transcriptions has diverse real-world applications, from making podcasts more accessible to refining market research insights. Organizations that employ this technology can drive engagement, improve their SEO rankings, and refine their content strategies.

Measuring Success and ROI

Once you have incorporated topic detection from transcription into your operations, it becomes critical to evaluate its effectiveness and determine the return on investment (ROI). Measuring success allows you to identify avenues for improvement and justify the resources dedicated to this process. The following are key metrics to consider:

Tracking Improved Engagement and Retention

One prime indicator of success in content-driven sectors is user engagement:

- Better Navigation Leads to Longer Viewing or Listening Durations: When users can swiftly find relevant topics within your content, they are more likely to engage deeply with it. For instance, if a podcast leverages effective topic detection, listeners can navigate quickly to sections that interest them, resulting in longer listen durations. The rise in engagement not only boosts user satisfaction but also promotes repeat visits.

Consider using analytics tools that monitor user behavior, like Google Analytics or specialized podcast analytics platforms like Podtrac, to track this metric. Analyzing metrics like average listen time and session duration can provide insights into user engagement driven by topic detection.

SEO Metrics

Effective topic detection can considerably enhance the online visibility of your content:

- Improved Keyword Rankings and Organic Traffic: Identifying relevant topics and optimizing your content around them can improve your search engine optimization (SEO) outcomes. Clearly defined topics aid search engines in comprehending the context of your content, leading to superior keyword rankings.

For instance, if your transcriptions reveal that certain themes resonate with your audience, you can construct targeted blog posts or articles, incorporating these keywords. Tools like SEMrush or Ahrefs can help monitor changes in keyword rankings and organic traffic over time, shedding light on the effectiveness of your topic detection strategies.

Internal Efficiency Gains

It's not just the external metrics that matter; assessing the impact of topic detection on internal processes is also pivotal:

- Quicker Content Analysis and Reduced Manual Effort: The adoption of automated topic detection can considerably cut down time spent on manual analysis. By swiftly extracting crucial themes from transcriptions, teams can concentrate on deriving insights rather than perusing data.

To measure this efficiency gain, compare the time dedicated to content analysis before and after implementing topic detection. You might discover that tasks that previously took hours can now be performed in minutes, allowing resources to be allocated to more strategic initiatives.
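The before-and-after comparison is simple arithmetic. Here is a small sketch with made-up timings (in minutes per recording) to show the calculation; the `efficiency_gain` helper and the numbers are illustrative only.

```python
from statistics import mean

def efficiency_gain(minutes_before, minutes_after):
    """Return (average minutes saved per task, percentage reduction)."""
    avg_before = mean(minutes_before)
    avg_after = mean(minutes_after)
    saved = avg_before - avg_after
    return saved, 100 * saved / avg_before

# Hypothetical analysis times for three recordings, before and after automation.
before = [120, 180, 150]
after = [15, 25, 20]

saved, percent = efficiency_gain(before, after)
print(f"Saved {saved:.0f} minutes per recording ({percent:.1f}% less time)")
```

Measuring the same set of tasks before and after keeps the comparison fair, since recordings of different lengths take different amounts of time to analyze.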

Additionally, it is a good idea to conduct surveys or feedback sessions with team members to gauge their experiences with the new processes. This qualitative data can offer valuable insights into how topic detection has bolstered workflow efficiency.

Tracking these metrics—user engagement and retention, SEO performance, and internal efficiency gains—enables you to effectively measure the success of your topic detection from transcription endeavors. This assessment will not only help justify current investments but also shape future enhancements in your data analysis methodologies.

Future Trends in Topic Detection and Transcription

As we move ahead, the fields of topic detection and transcription continue to evolve, underscored by emerging trends set to redefine these processes. Here are some pivotal future trends to anticipate:

More Accurate ASR (Automatic Speech Recognition) Tools

The development of Automatic Speech Recognition (ASR) tools is a significant trend poised to enhance the precision and efficiency of transcription services:

Improved Accuracy: The incorporation of machine learning and deep learning algorithms into ASR systems is increasing their competence in understanding diverse accents, dialects, and speech patterns. Google's speech recognition technology, for example, has been reported to reach a word error rate rivaling that of human transcribers, which substantially reduces the reliance on manual corrections.
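Word error rate (WER), the metric mentioned above, is the number of word substitutions, deletions, and insertions needed to turn the ASR output into the reference transcript, divided by the number of words in the reference. The sketch below computes it with a standard edit-distance table; the function name and sample sentences are illustrative, and real evaluations usually normalize punctuation and casing more carefully.

```python
def word_error_rate(reference, hypothesis):
    """WER = word-level edit distance / number of words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)

reference = "the customer asked about the refund policy"
hypothesis = "the customer asked about a refund"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.2%}")
```

In this example there is one substitution ("the" became "a") and one deletion ("policy"), so the WER is 2 errors over 7 reference words.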

Real-Time Transcriptions: Future ASR tools are likely to provide real-time transcription capabilities, granting immediate access to spoken content during live events, meetings, and broadcasts. This feature can promote accessibility for individuals with hearing impairments and streamline communication in various settings.

The Rise of Multimodal Understanding (Video, Image + Audio)

Another intriguing trend is the growth of multimodal understanding, which amalgamates diverse forms of media—like video, images, and audio—into holistic analyses:

Enhanced Contextual Understanding: AI systems, by consolidating insights from multiple modalities, can yield richer contextual interpretations. For instance, a transcription tool analyzing video content hand-in-hand with audio can better comprehend the context of conversations by considering visual cues.

Wider Application Scope: This trend will open new application possibilities across various fields such as education, marketing, and entertainment. For instance, educational platforms could utilize multimodal understanding to present interactive learning experiences blending video lectures with transcriptions and visual aids.

More Context-Aware NLP Models

The progression of Natural Language Processing (NLP) models forms another critical trend steering the future of topic detection:

Context-Aware Models: Going forward, NLP models are expected to comprehensively understand context and language nuances, including the recognition of emotional tone, sarcasm, and cultural references—factors often crucial for accurate topic detection.

Integration with AI Technologies: As NLP models become more sophisticated, they will likely be woven seamlessly with other AI technologies, enabling enhanced capabilities like sentiment analysis and predictive text generation. This integration can improve the overall quality and relevance of detected topics.

In conclusion, the future of topic detection from transcription holds immense potential for improving accuracy, efficiency, and user experience across multiple industries. Staying informed about these trends and adapting to the latest technologies can help organizations leverage topic detection to draw deeper insights from their audio content.

Conclusion

As we conclude our exploration of topic detection from transcription, it’s important to reflect on the key steps and benefits of this powerful process, as well as to encourage you to implement these strategies in your own work.

Throughout this blog post, we have covered several essential steps for effectively extracting topics from transcribed content:

- Choosing a Transcription Method: Whether utilizing automated tools or professional services, selecting the right transcription method is crucial for ensuring accuracy.

- Cleaning and Preparing Your Text: Properly formatting and refining your transcript sets the stage for effective analysis.

- Applying Topic Detection Techniques: Utilizing models like LDA or advanced NLP approaches allows for meaningful topic extraction.

- Interpreting and Refining Results: Reviewing and iterating on your output ensures that the detected topics are relevant and actionable.

The benefits of implementing these steps are significant, including improved content strategy, streamlined research processes, enhanced user experiences, and measurable ROI through increased engagement and efficiency.

FAQ Section

To further assist you in understanding topic detection from transcription, here are some frequently asked questions along with their answers:

1. What is topic detection from transcription?

Topic detection from transcription is the process of analyzing written text derived from spoken language to identify the main subjects or themes present in that content. This involves using natural language processing (NLP) techniques to extract meaningful insights from transcribed audio or video recordings.

2. Why is topic detection important?

Topic detection is important because it helps organizations and data analysts streamline research, enhance content strategies, and improve user experiences. By identifying key themes in transcriptions, businesses can create targeted content, quickly locate relevant information, and provide better search and recommendation features for their audiences.

3. What are the main methods for detecting topics?

There are several methods for detecting topics in transcriptions:

- Manual Analysis: Involves human analysts reviewing transcripts to identify key themes.

- Automated Topic Detection: Utilizes algorithms and machine learning models, such as Latent Dirichlet Allocation (LDA) or advanced NLP models like BERT and GPT.

- Keyword Extraction Techniques: Includes traditional methods like TF-IDF and keyword frequency analysis.

4. How can I improve the accuracy of my transcriptions?

To improve the accuracy of your transcriptions, consider the following:

- Use high-quality audio recording equipment to minimize background noise.

- Choose reliable transcription services or automated tools known for their accuracy.

- Regularly review and clean your transcriptions to remove errors and inconsistencies.

5. What tools and software can I use for topic detection?

There are various tools and software available for topic detection, including:

- Transcription Services: Google Cloud Speech-to-Text, Rev AI, Trint.

- NLP Libraries: spaCy, Hugging Face Transformers.

- Topic Modeling Tools: Gensim (for LDA), NLTK (Natural Language Toolkit).

6. How do I measure the success of my topic detection efforts?

You can measure the success of your topic detection efforts by tracking several key metrics:

- Engagement metrics (e.g., watch times or listen durations).

- SEO performance (e.g., keyword rankings and organic traffic).

- Internal efficiency gains (e.g., time saved in content analysis).

7. Can topic detection be applied to other types of content besides audio?

Yes, topic detection can be applied to various types of content, including written documents, articles, social media posts, and more. The principles of analyzing text for key themes remain consistent across different formats.

8. How often should I update my topic detection models?

Regular updates to your topic detection models are essential to ensure they remain effective. It’s advisable to review and update your models at least annually or whenever there are significant changes in language usage or industry jargon.

Other articles you may want to check:

Transcription Industry Trends & Statistics 2024

Why 70% of Podcasters Are Switching to AI Transcription—Trends You Can’t Ignore

The Future Is Now: 2023’s Must-Know Transcription Trends and Predictions
